NavFoM: World's First Cross-Embodiment Navigation AI Model Launched

NavFoM: A Breakthrough in Unified Robot Navigation

Galaxy General, working with research teams from Peking University, the University of Adelaide, and Zhejiang University, has launched NavFoM (Navigation Foundation Model), described as the world's first cross-embodiment, full-scenario panoramic navigation foundation model.

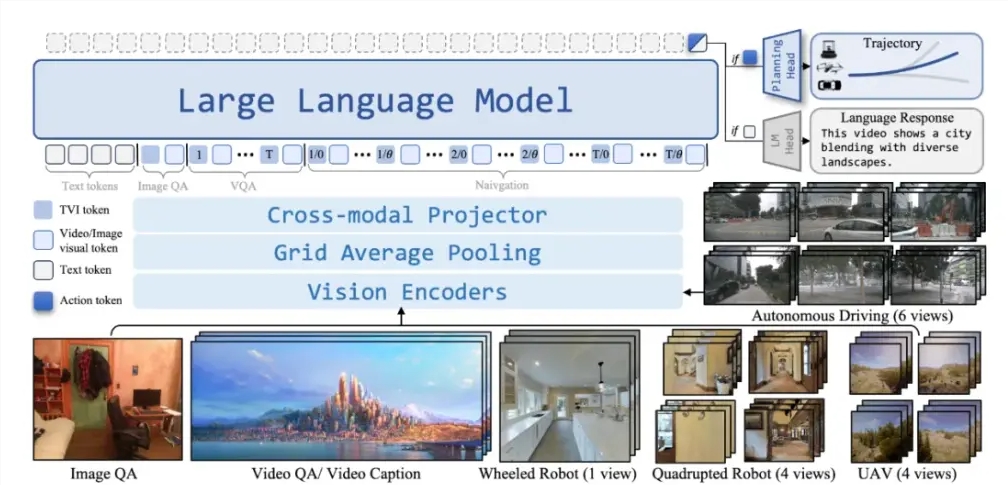

Unified Framework for Diverse Navigation Tasks

The model brings navigation tasks that have typically required separate systems into a single framework. These include:

- Vision-and-language navigation

- Goal-oriented navigation

- Visual tracking

- Autonomous driving applications

Dr. Chen Wei, lead researcher at Galaxy General, explains: "NavFoM eliminates the need for specialized models for each navigation task. Our approach mirrors how humans use the same cognitive framework to navigate different environments."

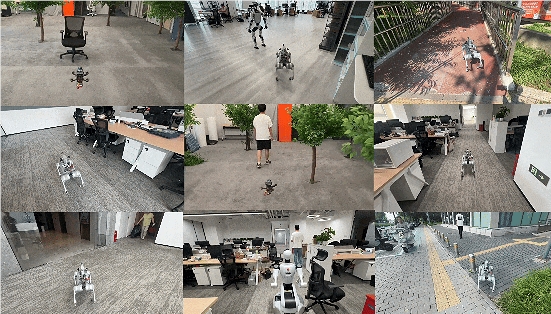

Zero-Shot Operation Across Environments

One of NavFoM's most notable features is full-scenario support: the model can operate in both indoor and outdoor environments without a pre-built map or prior knowledge of the scene. This means:

- No additional data collection needed for new environments

- Immediate deployment in unseen locations

- Reduced setup time and costs for implementation

The system achieves this by generalizing from its training data to situations it has not encountered before.

Multi-Task Support Through Natural Language

The model carries out different tasks in response to natural language instructions, including:

- Target following

- Autonomous navigation

- Complex route planning

This flexibility allows a range of robotic platforms, from robotic dogs to drones and autonomous vehicles, to operate efficiently within the same framework.

Technical Innovations: TVI Tokens and BATS Strategy

The research team introduced two groundbreaking technical components:

- TVI Tokens (Temporal-Viewpoint-Indexed Tokens): Enable the model to understand temporal sequences and directional information, both critical for navigation tasks.

- BATS strategy (Budget-Aware Token Sampling): Keeps the model performing well under limited computational resources, making it practical for real-world applications.
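To make the two ideas more concrete, here is a minimal Python sketch. It is not the NavFoM implementation: the `Frame` class, the `tvi_label` tag format, and the recency-first greedy rule in `sample_within_budget` are all hypothetical choices made for illustration. The sketch only shows the general shape of the problem, in which each observation carries a time step and a viewpoint, and the set of observations passed to the model must fit a fixed token budget.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Frame:
    """One camera observation (hypothetical structure, for illustration only)."""
    time_step: int   # position of the observation in the episode
    camera_id: int   # which camera (viewpoint) captured it
    num_tokens: int  # visual tokens this frame would consume


def tvi_label(frame: Frame) -> str:
    """Return an assumed text tag carrying the frame's time step and viewpoint."""
    return f"<t={frame.time_step}|view={frame.camera_id}>"


def sample_within_budget(frames: List[Frame], token_budget: int) -> List[Frame]:
    """Greedy, recency-first selection that never exceeds token_budget.

    This heuristic is an assumption; the article does not describe
    how the actual sampling strategy chooses frames.
    """
    if token_budget < 0:
        raise ValueError("token_budget must be non-negative")
    kept: List[Frame] = []
    remaining = token_budget
    # Visit the newest frames first so recent observations are favoured.
    for frame in sorted(frames, key=lambda f: f.time_step, reverse=True):
        if frame.num_tokens <= remaining:
            kept.append(frame)
            remaining -= frame.num_tokens
    # Restore chronological order (ties broken by camera) before use.
    return sorted(kept, key=lambda f: (f.time_step, f.camera_id))


if __name__ == "__main__":
    # Four time steps, two cameras, 64 tokens per frame (made-up numbers).
    history = [Frame(t, cam, 64) for t in range(4) for cam in range(2)]
    selected = sample_within_budget(history, token_budget=200)
    print([tvi_label(f) for f in selected])
```

With a budget of 200 tokens and 64 tokens per frame, only three of the eight frames fit. In this sketch the selection keeps both views from the latest time step and one view from the step before it, so the most recent surroundings stay fully covered while older history is thinned out.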

The team compiled an unprecedented training dataset containing:

- 8 million cross-task, cross-embodiment navigation samples

- 4 million open-ended question-and-answer pairs

This represents twice the training volume of previous models in this field.

Future Applications and Development

The release of NavFoM opens new possibilities for robotics development. According to Professor Li Ming of Peking University: "Developers can now build specialized applications on this foundation model through transfer learning, significantly reducing development time while improving performance."

Potential applications span:

- Smart city infrastructure

- Search and rescue operations

- Industrial automation

- Personal assistance robotics

The research team plans to release an open-source version of NavFoM later this year to accelerate innovation in the field.

Key Points:

🌟 First unified navigation model combining multiple robot tasks under one framework

🏞️ Zero-shot operation in both indoor/outdoor environments without prior mapping

💬 Natural language control enables intuitive human-machine interaction

💡 TVI Tokens & BATS strategy provide technical advantages in understanding and resource management

📊 Training dataset of 12 million samples: 8 million navigation samples plus 4 million question-and-answer pairs