Meta AI Launches MobileLLM-R1: A Lightweight Edge AI Model

Meta AI Introduces MobileLLM-R1 for Edge Inference

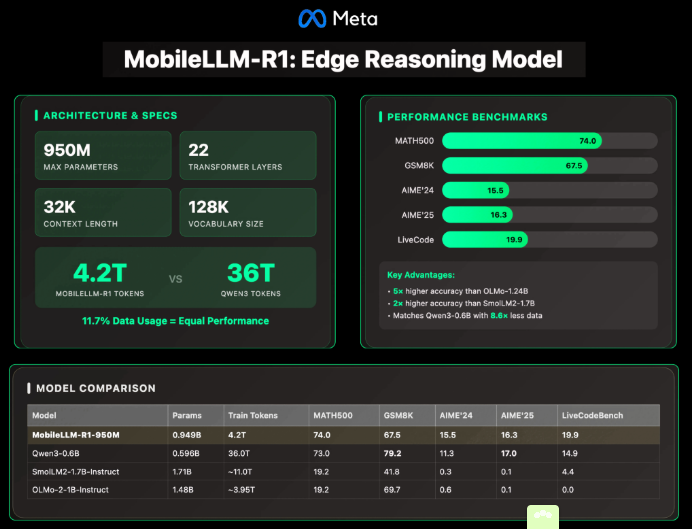

Meta AI has released MobileLLM-R1, a new series of lightweight models for edge inference, now available on Hugging Face. The models range from 140 million to 950 million parameters and are optimized for mathematical, coding, and scientific reasoning, aiming to deliver strong accuracy while staying under 1 billion parameters.

Architectural Innovations

The flagship model in the series, MobileLLM-R1-950M, incorporates several architectural optimizations:

- 22 Transformer layers with 24 attention heads and 6 grouped key-value (KV) heads

- Embedding dimension of 1536 and feed-forward hidden dimension of 6144

- Grouped-query attention (GQA), in which several query heads share each KV head, to reduce compute and memory demands

- Block-level weight sharing to reduce the parameter count without a significant latency penalty

- SwiGLU activations to improve representational capacity in a small model
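As a rough illustration of what these numbers imply, the sketch below derives a few quantities from the reported hyperparameters: a per-head dimension of 1536 / 24 = 64, four query heads sharing each KV head, and the per-layer projection sizes with and without GQA. It assumes a Llama-style layer with bias-free projections, contiguous query-to-KV grouping, and a three-matrix SwiGLU feed-forward block; these are illustrative assumptions rather than confirmed details of Meta's implementation, and embeddings and weight sharing are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Hyperparameters reported for MobileLLM-R1-950M."""
    n_layers: int = 22
    n_heads: int = 24      # query heads
    n_kv_heads: int = 6    # shared key/value heads (GQA)
    d_model: int = 1536    # embedding dimension
    d_ffn: int = 6144      # feed-forward hidden dimension

    @property
    def head_dim(self) -> int:
        return self.d_model // self.n_heads

    @property
    def queries_per_kv_head(self) -> int:
        return self.n_heads // self.n_kv_heads

    def kv_head_for(self, q_head: int) -> int:
        """KV head used by a query head (assumes contiguous grouping)."""
        return q_head // self.queries_per_kv_head

    def attention_params(self, grouped: bool = True) -> int:
        """Q, K, V, O projection weights for one layer (assumes no biases)."""
        kv_heads = self.n_kv_heads if grouped else self.n_heads
        q_and_o = 2 * self.d_model * self.d_model
        k_and_v = 2 * self.d_model * kv_heads * self.head_dim
        return q_and_o + k_and_v

    def swiglu_ffn_params(self) -> int:
        """Gate, up and down projections of a SwiGLU block (assumes no biases)."""
        return 3 * self.d_model * self.d_ffn


cfg = ModelConfig()
print(cfg.head_dim)                         # 64
print(cfg.queries_per_kv_head)              # 4
print(cfg.kv_head_for(9))                   # 2
print(cfg.attention_params(grouped=True))   # 5898240
print(cfg.attention_params(grouped=False))  # 9437184
print(cfg.swiglu_ffn_params())              # 28311552
```

Under these assumptions, GQA shrinks each layer's attention projections from about 9.4M to 5.9M weights, while the SwiGLU feed-forward block (about 28.3M weights) accounts for most of each layer's parameters.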

The base models support a 4K-token context length; the post-trained versions extend this to 32K.
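The SwiGLU feed-forward layer from the architecture list can be sketched in a few lines. This is a toy, pure-Python version assuming the common Llama-style gate/up/down layout; the weights and the 2- and 3-dimensional sizes are arbitrary placeholders, whereas the real model would map 1536-dimensional inputs through a 6144-dimensional hidden layer.

```python
import math


def silu(x: float) -> float:
    """SiLU (swish) activation x * sigmoid(x), written to avoid overflow."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


def matvec(w: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a row-major matrix by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def swiglu_ffn(x: list[float], w_gate: list[list[float]],
               w_up: list[list[float]], w_down: list[list[float]]) -> list[float]:
    """Gated feed-forward block: W_down(SiLU(W_gate x) * (W_up x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]  # element-wise gating
    return matvec(w_down, hidden)


# Toy example: 2-dim input, 3-dim hidden layer (placeholder weights).
x = [1.0, -1.0]
w_gate = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_up = [[1.0, 1.0], [2.0, 0.0], [0.0, 1.0]]
w_down = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
out = swiglu_ffn(x, w_gate, w_up, w_down)
print(out)
```

The gate branch lets the layer scale each hidden unit by a learned, input-dependent amount, which is the property usually credited for SwiGLU's efficiency in small models.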

Training Efficiency Breakthrough

MobileLLM-R1 stands out for how little training data it needed:

- Trained on approximately 4.2 trillion tokens

- Uses only about 11.7% of the training data of Qwen3-0.6B, which was trained on 36 trillion tokens (4.2T ÷ 36T ≈ 11.7%)

- Achieves accuracy comparable to or better than Qwen3-0.6B despite the much smaller training budget

The model was then refined with supervised fine-tuning on mathematical, coding, and reasoning datasets. Combined with the smaller pretraining corpus, this approach significantly lowers both training costs and resource requirements.

Benchmark Performance

In Meta's reported benchmark results, MobileLLM-R1-950M performed strongly:

- On the MATH500 dataset:

  - ~5x the accuracy of OLMo-1.24B

  - ~2x the accuracy of SmolLM2-1.7B

- Matched or surpassed Qwen3-0.6B on:

  - GSM8K (grade-school math reasoning)

  - AIME (competition mathematics)

  - LiveCodeBench (coding)

These results are particularly notable given that the model was trained on far fewer tokens than the models it is compared against.

Limitations and Considerations

The specialized focus of MobileLLM-R1 comes with certain trade-offs:

- Performance lags behind larger models in:

  - General conversation

  - Common-sense reasoning

  - Creative tasks

- Meta's FAIR NC (non-commercial) license restricts use in production and commercial settings

- The extended 32K context increases the key-value (KV) cache size and memory requirements during inference
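To give a sense of scale for the last point, the sketch below estimates KV-cache size from the reported architecture. It assumes 16-bit (2-byte) keys and values, a head dimension of 64 (1536 / 24), batch size 1, and no cache quantization; real runtimes also need memory for weights, activations, and allocator overhead, so the results are lower-bound estimates.

```python
def kv_cache_bytes(seq_len: int, n_layers: int = 22, n_kv_heads: int = 6,
                   head_dim: int = 64, bytes_per_elem: int = 2,
                   batch: int = 1) -> int:
    """Keys and values (factor 2) for every layer, KV head, and cached token."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem * batch


MIB = 1024 ** 2
for ctx in (4_096, 32_768):
    gqa = kv_cache_bytes(ctx)
    # Hypothetical comparison: one KV head per query head (no GQA).
    mha = kv_cache_bytes(ctx, n_kv_heads=24)
    print(f"{ctx:>6} tokens: GQA {gqa / MIB:.0f} MiB, full MHA {mha / MIB:.0f} MiB")
```

Under these assumptions, the cache grows from about 132 MiB at 4K tokens to about 1 GiB (1056 MiB) at 32K, and it would be four times larger again without GQA's six shared KV heads.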

Industry Implications

The release of MobileLLM-R1 reflects a growing trend toward smaller, specialized models that deliver competitive reasoning performance without massive training budgets. Such models make it more practical to deploy language models on edge devices, particularly for mathematical, coding, and scientific applications.

The flagship 950M model is available at: https://huggingface.co/facebook/MobileLLM-R1-950M

Key Points:

✅ New Model Release: Meta AI's MobileLLM-R1 series offers lightweight edge inference models ranging from 140M to 950M parameters.

✅ Training Efficiency: Matches or exceeds Qwen3-0.6B's accuracy using only ~11.7% of its training data.

✅ Performance Gains: Outperforms larger open-source models such as OLMo-1.24B and SmolLM2-1.7B on the MATH500 math benchmark.