IBM Unveils Granite 4.0 Nano Series for Edge AI

IBM Introduces Compact AI Models for Edge Computing

IBM's AI team has released the Granite 4.0 Nano series, a family of small language models designed for local and edge inference. The release aims to bring capable AI to resource-constrained environments while keeping enterprise-grade governance and an open-source license.

Model Architecture and Features

The series comprises eight models in two size classes, 350M and 1B (the hybrid 1B model has roughly 1.5B parameters in total). The models use a hybrid architecture that combines state space model (SSM) layers with conventional transformer layers, balancing efficiency against capability.
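For readers new to SSMs, the core idea is a linear recurrence that compresses everything seen so far into a fixed-size state. The sketch below is a deliberately simplified, diagonal, time-invariant SSM in plain Python and is not Granite's actual layer: production SSM layers such as Mamba make the parameters input-dependent ("selective") and run parallel scans on accelerators. The function name `ssm_scan` and the parameters `a`, `b`, `c` are illustrative.

```python
from typing import List


def ssm_scan(xs: List[float], a: List[float], b: List[float], c: List[float]) -> List[float]:
    """Run a toy diagonal linear state space model over a scalar input sequence.

    Per state dimension i:
        h_t[i] = a[i] * h_{t-1}[i] + b[i] * x_t
        y_t    = sum_i c[i] * h_t[i]
    The state h always has len(a) entries, however long xs is.
    """
    if not (len(a) == len(b) == len(c)):
        raise ValueError("a, b and c must have the same length")
    h = [0.0] * len(a)  # fixed-size recurrent state, no per-token cache
    ys: List[float] = []
    for x in xs:
        # Decay the previous state and mix in the new input.
        h = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(a, h, b)]
        # Read the output out of the current state.
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, h)))
    return ys
```

Feeding a single impulse shows the state decaying geometrically: `ssm_scan([1.0, 0.0, 0.0], [0.5], [1.0], [1.0])` returns `[1.0, 0.5, 0.25]`.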

Notable variants include:

- Granite 4.0 H 1B: hybrid SSM architecture, ~1.5B parameters

- Granite 4.0 H 350M: hybrid SSM architecture, ~350M parameters

- Transformer-only variants for the broadest runtime compatibility

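The hybrid design interleaves SSM layers with transformer attention layers. An SSM layer carries a fixed-size recurrent state rather than a key-value (KV) cache that grows with every token of context, so the hybrid models need substantially less memory than pure transformer models at long context lengths, while the remaining attention layers keep the versatility of transformer modules.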
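The memory advantage can be made concrete with back-of-the-envelope arithmetic. Each transformer attention layer caches one key and one value vector per KV head for every token, so its memory grows linearly with context length; an SSM layer's state does not. The sketch below counts KV-cache bytes for a hypothetical 32-layer model, comparing an all-attention stack with a hybrid where one layer in four is attention. The layer count, head sizes, context length and 1-in-4 ratio are assumptions for illustration, not Granite's published configuration.

```python
from typing import List


def layer_schedule(num_layers: int, attention_every: int) -> List[str]:
    """Hypothetical layout: every `attention_every`-th layer is attention, the rest are SSM."""
    if attention_every < 1:
        raise ValueError("attention_every must be >= 1")
    return [
        "attention" if (i + 1) % attention_every == 0 else "ssm"
        for i in range(num_layers)
    ]


def kv_cache_bytes(
    schedule: List[str],
    seq_len: int,
    num_kv_heads: int,
    head_dim: int,
    bytes_per_elem: int = 2,  # 2 bytes per value for fp16/bf16
) -> int:
    """KV-cache size of the attention layers only. SSM layers keep a fixed-size
    state that does not grow with seq_len, so they are not counted here."""
    # One key vector and one value vector per KV head, per token, per attention layer.
    per_token_per_layer = 2 * num_kv_heads * head_dim * bytes_per_elem
    num_attention = schedule.count("attention")
    return num_attention * seq_len * per_token_per_layer


# Hypothetical configuration, chosen only to make the arithmetic easy to follow.
NUM_LAYERS, SEQ_LEN, KV_HEADS, HEAD_DIM = 32, 32_768, 8, 64

full = kv_cache_bytes(layer_schedule(NUM_LAYERS, 1), SEQ_LEN, KV_HEADS, HEAD_DIM)
hybrid = kv_cache_bytes(layer_schedule(NUM_LAYERS, 4), SEQ_LEN, KV_HEADS, HEAD_DIM)
print(f"all-attention KV cache: {full / 2**20:.0f} MiB")
print(f"hybrid (1 in 4 attention) KV cache: {hybrid / 2**20:.0f} MiB")
```

With these assumed numbers the script reports 2048 MiB for the all-attention stack versus 512 MiB for the hybrid. The saving scales with the fraction of layers that are SSMs, and it widens as the context grows because the SSM layers' own state stays constant.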

Training and Performance

IBM trained these compact models with the same methodology used for the larger Granite 4.0 models: pretraining on more than 15 trillion tokens, followed by instruction tuning aimed at improving:

- Tool usage capabilities

- Instruction following accuracy

- General task performance

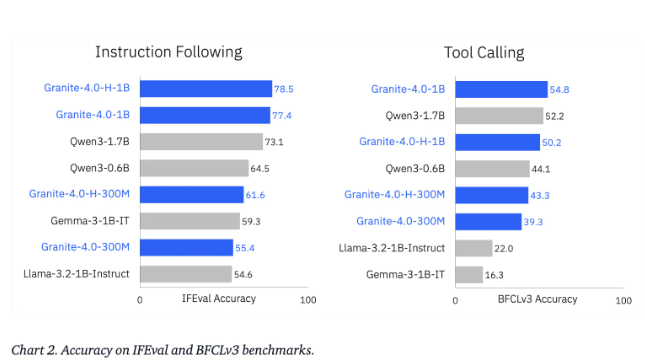

In IBM's benchmark comparisons against similarly sized models such as Qwen, Gemma, and Liquid AI's LFM, Granite 4.0 Nano scores higher in:

- General knowledge tasks

- Mathematical computations

- Coding applications

- Security-related tasks

The series also performs well on agentic workloads, with strong results on IFEval (instruction following) and the Berkeley Function Calling Leaderboard v3 (BFCLv3, tool calling).
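Function-calling benchmarks such as BFCL score whether a model picks the right function and fills in its arguments correctly. On the application side, the emitted call still has to be parsed and executed. The sketch below assumes the model's tool call has already been extracted as a JSON object with `name` and `arguments` fields; Granite's actual chat template and tool-call format may differ, and the `get_weather` tool and `TOOLS` registry are hypothetical.

```python
import json
from typing import Any, Callable, Dict


def get_weather(city: str, unit: str = "celsius") -> str:
    """Hypothetical tool; a real application would call a weather service here."""
    return f"Weather for {city} in {unit}: (stub)"


# Hypothetical registry mapping tool names the model may emit to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_tool_call(raw: str) -> Any:
    """Parse a tool call assumed to look like
    {"name": <tool name>, "arguments": {<keyword arguments>}} and run the tool."""
    call = json.loads(raw)
    if not isinstance(call, dict):
        raise TypeError("tool call must be a JSON object")
    name = call.get("name")
    args = call.get("arguments", {})
    # Reject tools the application never registered instead of guessing.
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise TypeError("'arguments' must be a JSON object")
    return TOOLS[name](**args)
```

For example, `dispatch_tool_call('{"name": "get_weather", "arguments": {"city": "Paris"}}')` returns `"Weather for Paris in celsius: (stub)"`. Validating the name and argument types before calling keeps a malformed model output from executing arbitrary code paths.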

Enterprise-Grade Deployment

All Granite4.0Nano models come with:

- Apache 2.0 license, permitting open-source and commercial use

- ISO/IEC 42001 certification, the international standard for AI management systems

- Cryptographic signatures for traceability

The models support deployment across various environments including:

- Edge devices

- Local servers

- Browser-based applications

Supported runtimes include:

- vLLM

- llama.cpp

- MLX

The models are available on Hugging Face and on IBM's watsonx.ai platform, so they can be integrated into existing workflows.
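A minimal sketch of running one of the models locally with the Hugging Face `transformers` library. It assumes a recent `transformers` release that supports the Granite 4.0 hybrid architecture, PyTorch installed, and the repository ID `ibm-granite/granite-4.0-h-350m`; confirm the exact model names on IBM's Hugging Face organization page before use.

```python
DEFAULT_MODEL_ID = "ibm-granite/granite-4.0-h-350m"  # assumed repo ID; verify on Hugging Face


def generate(prompt: str, model_id: str = DEFAULT_MODEL_ID, max_new_tokens: int = 128) -> str:
    """Run a single chat turn through a Granite 4.0 Nano model with transformers."""
    # Imported lazily so this module can be imported without transformers/torch installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    messages = [{"role": "user", "content": prompt}]
    # Format the conversation with the model's chat template and tokenize it.
    inputs = tokenizer.apply_chat_template(
        messages,
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the model's reply is decoded.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0, prompt_len:], skip_special_tokens=True)


# Example (downloads the model weights on first run):
# print(generate("Explain in one sentence what a state space model is."))
```

Other runtimes listed above use their own loaders; llama.cpp, for example, expects weights in GGUF format rather than the Hugging Face checkpoint layout.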

Key Points:

🔹 IBM's Granite 4.0 Nano series offers eight compact AI models for edge computing, in 350M and 1B size classes

🔹 Hybrid SSM-transformer architecture reduces memory use without sacrificing capability

🔹 Trained on more than 15 trillion tokens, with instruction tuning for tool use and instruction following

🔹 Enterprise-ready, with ISO/IEC 42001 certification and cryptographic signatures

🔹 Released under the Apache 2.0 license, with support for runtimes such as vLLM, llama.cpp, and MLX