vLLM-Omni Breaks Barriers with Multi-Modal AI Processing

vLLM-Omni Ushers in New Era of Multi-Modal AI

At a tech showcase that had developers buzzing, the vLLM team pulled back the curtain on its latest project: vLLM-Omni. This isn't just another incremental update; it rethinks how AI systems can process several data types at the same time.

Beyond Text: A Framework for All Media

While most language models still operate in the text-only realm, modern applications demand much more. Imagine an AI assistant that doesn't just read your messages but understands the photos you share, analyzes voice notes, and even generates video responses. That's precisely the future vLLM-Omni is building toward.

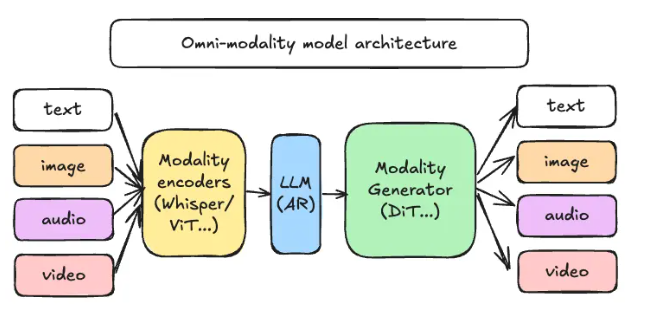

The framework's secret sauce lies in its decoupled pipeline architecture, which works like a well-organized factory assembly line:

- Modal Encoder: Converts images, audio clips or video frames into embedding vectors that the LLM core can consume

- LLM Core: The brain handling traditional language tasks and conversations

- Modal Generator: Crafts rich media outputs from simple text prompts
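To make the assembly-line idea concrete, here is a minimal Python sketch of the three stages wired together. It assumes each stage is a plain function. The `Pipeline` class and the `toy_*` stand-ins are hypothetical illustrations, not vLLM-Omni's API, and a real deployment would back each stage with a neural network that may run on its own GPUs.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical stage interfaces for illustration; not vLLM-Omni's actual API.
Vector = List[float]


@dataclass
class Pipeline:
    encoder: Callable[[bytes], Vector]      # modal encoder: raw media -> embedding
    llm_core: Callable[[str, Vector], str]  # LLM core: prompt + embedding -> text
    generator: Callable[[str], bytes]       # modal generator: text -> media bytes

    def run(self, prompt: str, media: bytes) -> Tuple[str, bytes]:
        # Each stage only sees the previous stage's output, so stages stay decoupled.
        embedding = self.encoder(media)
        text = self.llm_core(prompt, embedding)
        output_media = self.generator(text)
        return text, output_media


# Toy stand-ins so the sketch runs without any model weights.
def toy_encoder(media: bytes) -> Vector:
    # Scale each byte into [0, 1] as a fake "embedding".
    return [b / 255 for b in media]


def toy_llm(prompt: str, embedding: Vector) -> str:
    # Pretend to reason over the prompt and the media features.
    return f"{prompt} (media features: {len(embedding)})"


def toy_generator(text: str) -> bytes:
    # Pretend to render media; here we just encode the text.
    return text.upper().encode()


pipe = Pipeline(toy_encoder, toy_llm, toy_generator)
text, output_media = pipe.run("Describe this photo", b"\x00\xff\x80")
print(text)  # → Describe this photo (media features: 3)
```

Because the pipeline depends only on each stage's input and output types, any one stage can be swapped out or scaled without touching the other two, which is the point of a decoupled design.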

Practical Benefits for Developers

What does this mean for engineering teams? Flexibility and efficiency. Each processing stage can be scaled independently, so GPU capacity is no longer wasted on components sitting idle. During our demo, we watched the system dynamically shift computing power between analyzing an image and generating accompanying narration.
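The article does not describe how vLLM-Omni decides where to shift compute. As a purely hypothetical illustration, the sketch below splits a fixed GPU budget across the three stages in proportion to each stage's pending work, using largest-remainder rounding so the whole budget is always assigned. The function name, stage names and queue-depth signal are all assumptions, not the framework's scheduler.

```python
from typing import Dict


def allocate_gpus(total_gpus: int, pending: Dict[str, int]) -> Dict[str, int]:
    # Hypothetical policy, not vLLM-Omni's scheduler: GPUs follow pending work.
    total_work = sum(pending.values())
    if total_work == 0:
        # Nothing queued anywhere: leave every stage at zero GPUs.
        return {stage: 0 for stage in pending}
    # Ideal fractional share of GPUs for each stage.
    quotas = {s: total_gpus * w / total_work for s, w in pending.items()}
    # Start from the whole-GPU floor of each share.
    alloc = {s: int(q) for s, q in quotas.items()}
    leftover = total_gpus - sum(alloc.values())
    # Hand the remaining GPUs to the stages with the largest fractional parts.
    by_remainder = sorted(quotas, key=lambda s: quotas[s] - alloc[s], reverse=True)
    for s in by_remainder[:leftover]:
        alloc[s] += 1
    return alloc


# Image-analysis phase: the encoder holds most of the queued work.
print(allocate_gpus(8, {"encoder": 6, "llm_core": 2, "generator": 0}))
# → {'encoder': 6, 'llm_core': 2, 'generator': 0}

# Narration phase: the work has moved to the generator.
print(allocate_gpus(8, {"encoder": 0, "llm_core": 2, "generator": 6}))
# → {'encoder': 0, 'llm_core': 2, 'generator': 6}
```

A production scheduler would also weigh model load time, memory limits and latency targets; proportional allocation is used here only because it is the simplest policy that shows compute following demand.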

The GitHub repository already shows promising activity, with early adopters experimenting with creative applications ranging from automated video editing to interactive educational tools.

"We're seeing demand explode for models that understand context across multiple media types," explained lead engineer Maya Chen. "vLLM-Omni gives developers the toolkit to meet that demand without reinventing the wheel each time."

Key Points:

- 🚀 True multi-modal processing handles text, images, audio and video seamlessly

- ⚙️ Modular architecture allows precise resource allocation

- 🌍 Open-source availability invites global collaboration

- 🏗️ Scalable design adapts to diverse application needs