Study Warns: Overuse of AI May Harm Critical Thinking

MIT Study Reveals Cognitive Costs of AI Dependency

New research from the MIT Media Lab warns that widespread use of large language models (LLMs) like ChatGPT may come with significant cognitive tradeoffs. The study, led by Nataliya Kosmyna, examines how AI assistance affects learning processes during academic writing tasks.

Research Methodology

The team conducted experiments with 54 participants divided into three groups:

- LLM group: Used only ChatGPT for writing

- Search engine group: Used traditional search tools (no LLMs)

- Pure mental effort group: Used no digital tools

Researchers recorded brain activity with electroencephalography (EEG) while participants completed the writing tasks. The study ran over four sessions. In the final session, some participants switched conditions, moving between tool-dependent and tool-free writing.
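For readers who want to picture the three-group design, the split can be sketched in a few lines of Python. This is only an illustration. The article does not say how participants were allocated, so the random shuffle, the equal group sizes, the participant IDs, and the `assign_groups` helper are all assumptions rather than the researchers' procedure.

```python
import random

# Group labels taken from the article; everything else here is hypothetical.
GROUPS = ["LLM", "Search engine", "Pure mental effort"]


def assign_groups(participant_ids, groups=GROUPS, seed=0):
    """Shuffle participants, then deal them round-robin into the groups.

    Assumption: random allocation with (near-)equal group sizes.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)

    assignment = {group: [] for group in groups}
    for index, pid in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(pid)
    return assignment


# 54 made-up participant IDs, matching the sample size reported in the article.
participants = [f"P{n:02d}" for n in range(1, 55)]
assignment = assign_groups(participants)

for group, members in assignment.items():
    print(group, len(members))
```

Under these assumptions each group ends up with 18 people, since 54 divides evenly by three.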

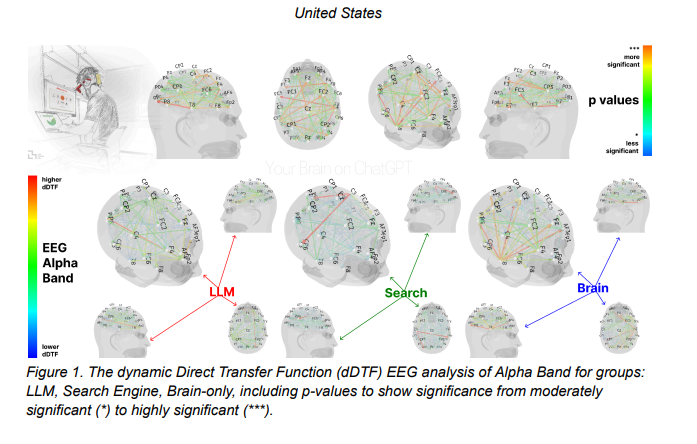

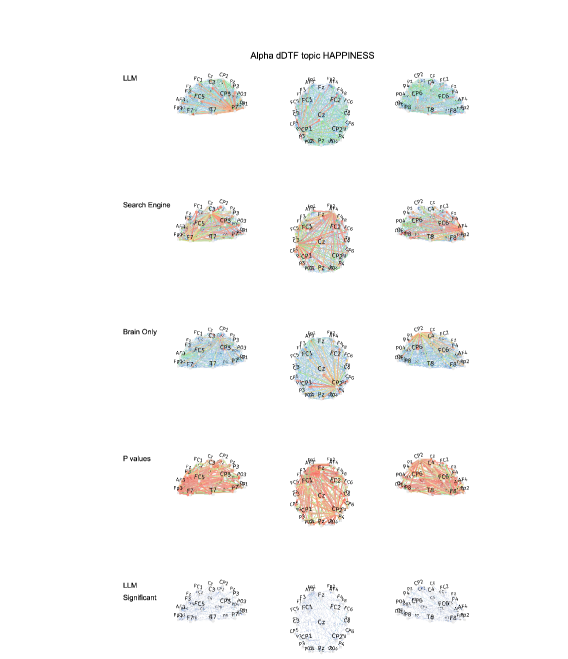

Key Findings: Neural Impact of AI Use

The study revealed striking differences in brain connectivity patterns:

- Strongest connectivity appeared in the pure mental effort group

- Moderate connectivity occurred in search engine users

- Weakest overall coupling was found among LLM users

Participants who moved from AI assistance to unaided work in the final session showed the most pronounced effects:

- Reduced alpha wave activity (linked to creativity and semantic processing)

- Diminished beta wave patterns (associated with focused attention)

- Impaired episodic memory consolidation

"These findings suggest AI reliance may create passive learning methods that weaken critical thinking," explains Kosmyna. "We're seeing what we term 'cognitive debt' - short-term efficiency gains at the expense of long-term cognitive development."

Educational Implications and Recommendations

The research highlights several concerns for academic environments:

- Memory encoding: LLM users showed difficulty recalling their own written content

- Ownership perception: AI-assisted writers felt less connection to their work

- Cognitive agency: Traditional methods fostered deeper engagement with material

The team recommends balanced approaches:

- Implementing "tool-free" learning phases before introducing AI assistance

- Using AI selectively once learners have built foundational neural pathways through unaided work

- Developing methods to distinguish human-authored from AI-generated content

The study concludes that while LLMs offer undeniable productivity benefits, educators must carefully consider their integration to preserve critical thinking skills and knowledge retention.

Key Points:

- EEG recordings show weaker brain connectivity in the LLM group than in the search engine and pure mental effort groups

- Memory encoding suffers when relying on LLMs for writing tasks

- Cognitive debt accumulates through repeated AI dependency

- Balanced educational approaches may mitigate negative effects

- Ownership perception declines with increased AI assistance