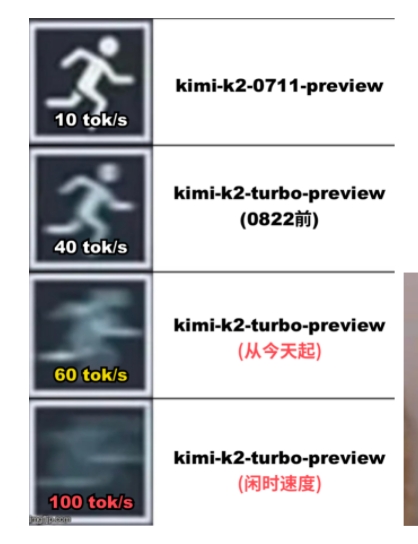

Moonshot AI Boosts Kimi-K2 Model Speed to 60 Tokens per Second

Moonshot AI Announces Major Speed Upgrade for Kimi-K2 Model

August 22, 2025 - Moonshot AI has raised the output speed of its Kimi-K2-Turbo-Preview model. The company announced today that the model now generates 60 tokens per second in standard operation, with peaks of up to 100 tokens per second.

Technical Milestone for AI Processing

The engineering team at Moonshot AI optimized the model's architecture to reach these speeds. The upgrade represents a 40% increase over previous benchmarks, which shortens the time users wait for AI-generated responses.
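To put the throughput figures in concrete terms, the short sketch below converts a token rate into an approximate streaming time for a response. It assumes a constant generation rate and ignores time to first token and network overhead, neither of which the announcement quantifies; the function name and the sample response lengths are illustrative only.

```python
# Rough estimate: seconds needed to stream a response at a fixed token rate.
# Assumption: constant rate, no time-to-first-token or network delay.

STANDARD_TPS = 60.0   # standard rate quoted in the announcement
PEAK_TPS = 100.0      # peak rate quoted in the announcement


def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Return the time in seconds to emit `output_tokens` at the given rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    # Hypothetical response lengths, chosen only for illustration.
    for n in (200, 1000, 4000):
        std = generation_seconds(n, STANDARD_TPS)
        peak = generation_seconds(n, PEAK_TPS)
        print(f"{n:>5} tokens: {std:6.1f} s at 60 tok/s, {peak:6.1f} s at 100 tok/s")
```

Under these assumptions, a 1,000-token reply takes roughly 17 seconds at the standard rate and 10 seconds at the peak rate.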

Pricing and Availability

While delivering these performance gains, Moonshot AI continues to offer the Kimi-K2-Turbo-Preview at special promotional rates:

- Input pricing (cache hit): ¥2.00 per million tokens

- Input pricing (cache miss): ¥8.00 per million tokens

- Output pricing: ¥32.00 per million tokens

These discounted rates will remain in effect until September 1st, after which standard pricing will resume.
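As a rough illustration of how the promotional rates listed above translate into a bill, the sketch below multiplies token counts by the per-million prices. The function name and the sample workload are hypothetical, and the calculation assumes billing is strictly proportional to token counts with no minimum charge or rounding rules, which the announcement does not specify.

```python
from decimal import Decimal

# Promotional prices from the announcement, in yuan per million tokens.
PRICE_PER_MILLION = {
    "input_cache_hit": Decimal("2.00"),
    "input_cache_miss": Decimal("8.00"),
    "output": Decimal("32.00"),
}

MILLION = Decimal(1_000_000)


def estimate_cost_yuan(cache_hit_tokens: int,
                       cache_miss_tokens: int,
                       output_tokens: int) -> Decimal:
    """Estimate the cost in yuan, assuming purely proportional billing."""
    counts = {
        "input_cache_hit": cache_hit_tokens,
        "input_cache_miss": cache_miss_tokens,
        "output": output_tokens,
    }
    if any(n < 0 for n in counts.values()):
        raise ValueError("token counts must be non-negative")
    total = sum(Decimal(n) * PRICE_PER_MILLION[kind] for kind, n in counts.items())
    return total / MILLION


if __name__ == "__main__":
    # Hypothetical workload, chosen only for illustration.
    cost = estimate_cost_yuan(500_000, 1_500_000, 250_000)
    print(f"Estimated cost: ¥{cost}")
```

For this sample workload the three components are ¥1 (cache hits), ¥12 (cache misses), and ¥8 (output), for a total of ¥21. Output tokens dominate the per-token price, so long generations cost far more than long prompts of the same size.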

Future Development Roadmap

In its official statement, Moonshot AI thanked users for their support and outlined ongoing development plans:

"We remain committed to continuous improvement of the Kimi K2 model's performance. Our team is already working on further optimizations that will push the boundaries of what's possible in AI response times."

The company encourages interested users to visit its official platform for detailed technical specifications and implementation guides.

Industry Impact

This speed enhancement positions the Kimi K2 model as one of the fastest commercially available AI solutions. The improvement is particularly significant for:

- Real-time translation services

- Content generation platforms

- Customer support automation

- Data analysis applications

Key Points:

- Performance boost: Kimi-K2-Turbo-Preview now generates 60 tokens/sec (100 tokens/sec at peak)

- Pricing promotion: Special rates available until September 1st

- User benefits: Reduced latency improves interactive experiences

- Future focus: Continued optimization planned for coming months