Fine-Tuning AI Models Without the Coding Headache

The Fine-Tuning Dilemma

AI models like ChatGPT and LLaMA have moved from novelty to necessity in workplaces worldwide. Yet many teams hit the same wall: these jack-of-all-trades models often stumble on industry-specific tasks.

"It's like having a brilliant intern who keeps missing the point," says one developer we spoke with. "The model can discuss quantum physics but fails at our basic product questions."

Traditional solutions come with steep barriers:

- Setup nightmares: Days lost configuring dependencies

- Budget busters: GPU costs running thousands per experiment

- Parameter paralysis: Newcomers drowning in technical jargon

Enter LLaMA-Factory Online

Built in collaboration with the popular open-source LLaMA-Factory project, the platform turns fine-tuning from a coding marathon into something resembling online shopping. It offers:

- Visual workflows replacing code scripts

- Pre-configured cloud GPUs available on demand

- Full training pipelines from data prep to evaluation

"We cut our development time by two-thirds," reports a smart home tech lead who used the platform. "What used to take weeks now happens before lunch."

Why It Works

The secret sauce lies in four key ingredients:

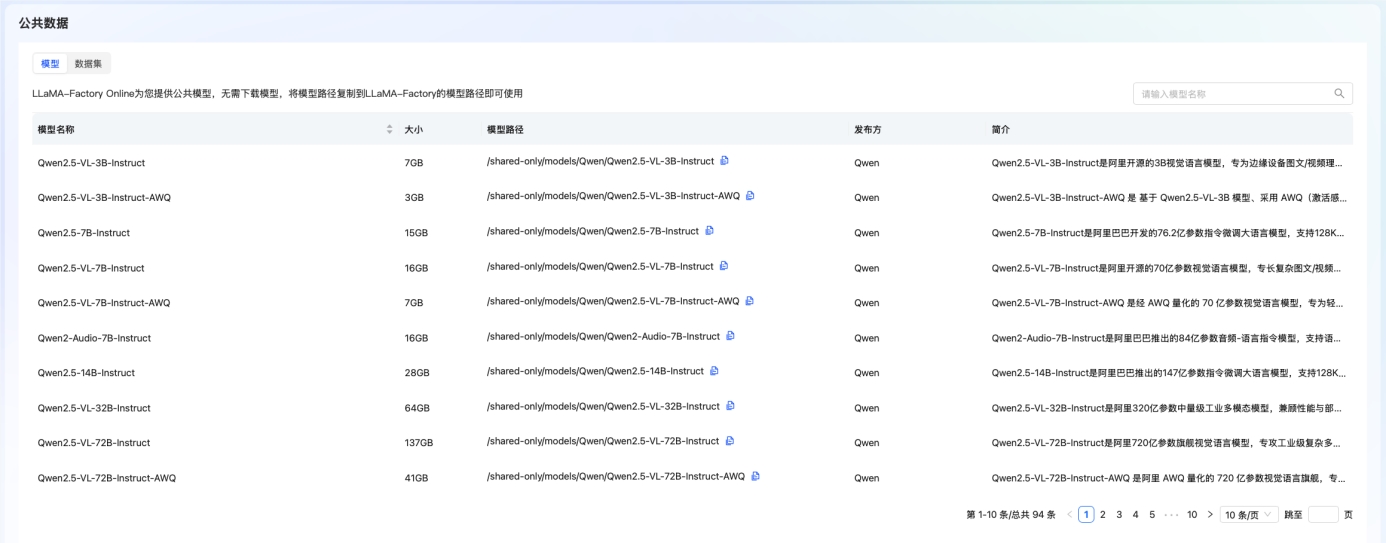

- Model Buffet: Over 100 pre-loaded models, including LLaMA, Qwen, and Mistral, plus the option to bring your own private models.

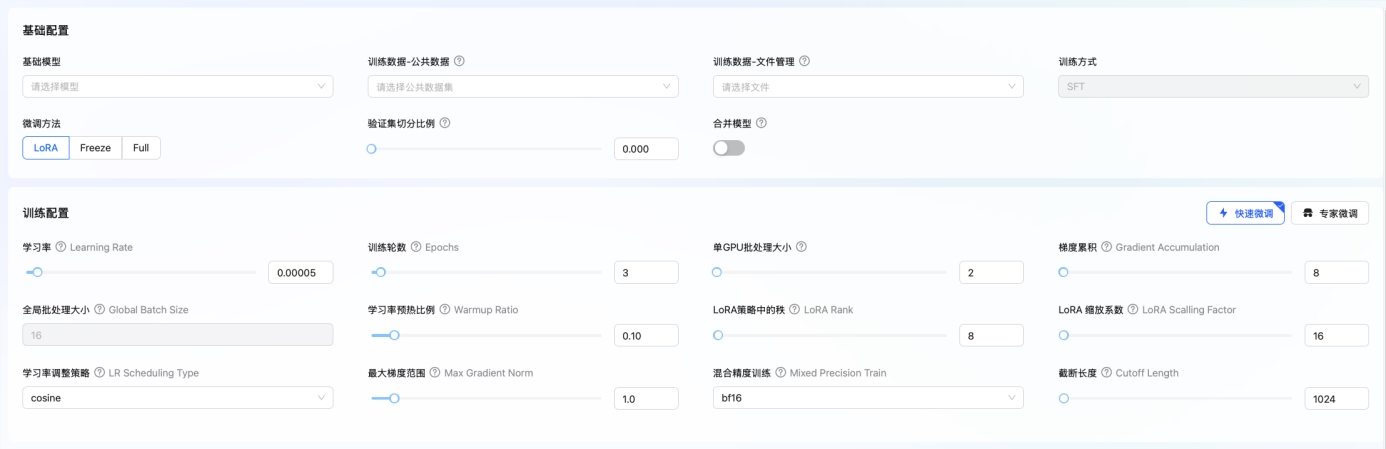

- Flexible Training: Choose quick LoRA tweaks or deep full-model tuning as needed.

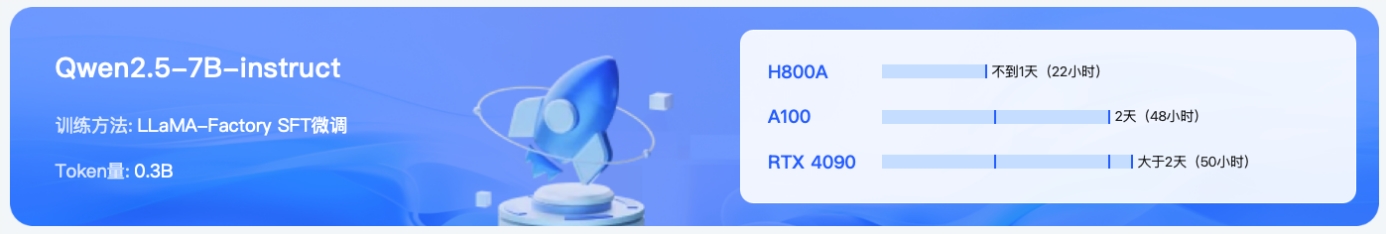

- Smart Resource Use: Pay-as-you-go GPU access with intelligent scheduling options.

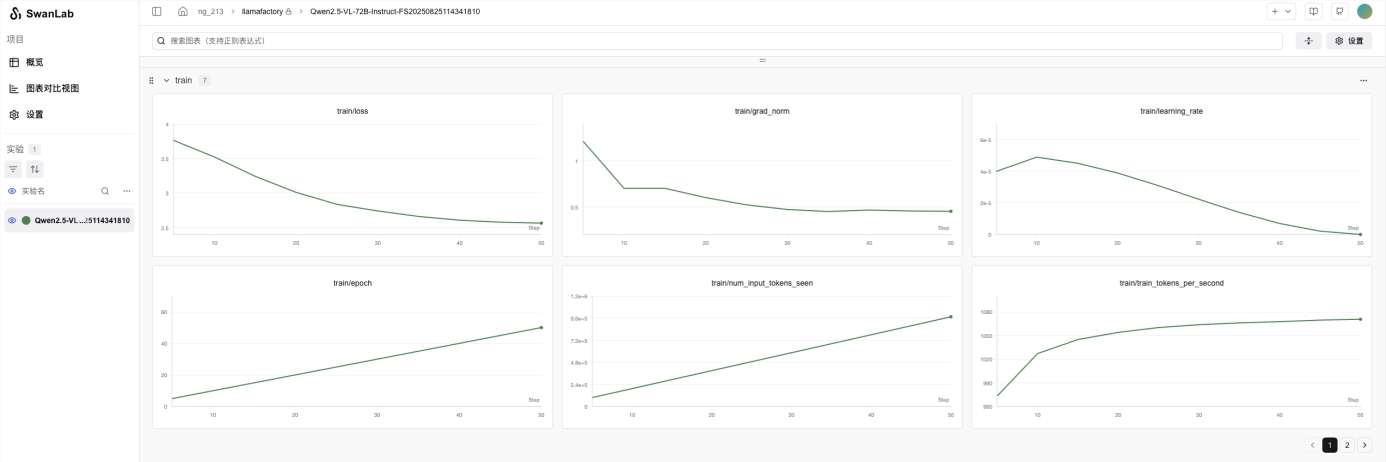

- Transparent Tracking: Real-time monitoring tools to catch issues early.
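To make the "quick LoRA tweaks versus deep full-model tuning" choice concrete, here is a rough sketch of the arithmetic behind it. LoRA freezes a layer's weight matrix and trains two small low-rank matrices instead, so the trainable parameter count for that layer drops from `d_in * d_out` to `rank * (d_in + d_out)`. The layer size and rank below are illustrative assumptions, not platform defaults.

```python
def full_tuning_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for LoRA: matrices A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


# Hypothetical layer size and rank, chosen only for illustration.
d_in, d_out, rank = 4096, 4096, 8

full = full_tuning_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)

print(f"Full tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"LoRA trains {lora / full:.2%} of the layer")
```

Under these assumed numbers the LoRA adapter is a small fraction of the layer, which is why it tends to be the faster and cheaper option, while full tuning touches every weight.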

Case Study: Smarter Homes, Faster

The smart home team's journey illustrates the platform's power:

- Selected Qwen3-4B as their base model after efficiency tests

- Processed 10,000+ command samples through the visual interface

- Fine-tuned using LoRA parameters adjusted via sliders (no coding)

- Achieved 50%+ accuracy gains in just 10 hours
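For readers curious what "command samples" might look like as training data, here is a minimal sketch. The open-source LLaMA-Factory project accepts Alpaca-style records with `instruction`, `input`, and `output` fields; whether the team's data or the platform's visual interface used this exact layout is an assumption, and the action schema (`device`, `op`, `when`) is hypothetical.

```python
import json


def to_alpaca_record(command: str, actions: list) -> dict:
    """Wrap one spoken command and its target actions as an Alpaca-style record."""
    return {
        "instruction": "Convert the smart home command into a list of device actions.",
        "input": command,
        # The target is stored as a JSON string so the model learns to emit it verbatim.
        "output": json.dumps(actions, ensure_ascii=False),
    }


# Made-up samples with a hypothetical action schema, for illustration only.
samples = [
    ("If over 28°C, turn on AC", [{"device": "ac", "op": "on", "when": "temp > 28"}]),
    ("Turn off lights then TV", [{"device": "lights", "op": "off"}, {"device": "tv", "op": "off"}]),
]

records = [to_alpaca_record(command, actions) for command, actions in samples]
print(json.dumps(records, ensure_ascii=False, indent=2))
```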

The before-and-after difference was stark:

| Scenario | Before Tuning | After Tuning |
|----------|---------------|--------------|
| "If over 28°C, turn on AC" | Missing key functions | Perfect command execution |
| "Turn off lights then TV" | Skipped second step | Flawless multi-step control |
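The article does not say how the team scored its outputs. One plausible approach, sketched here, is exact-match accuracy on structured actions: a prediction counts only if every step and condition matches the expected result. The action schema and the "before" and "after" outputs are made up to mirror the two scenarios above; they are not the team's actual data.

```python
from typing import Dict, List

Action = Dict[str, str]  # hypothetical schema: device, op, optional "when" condition


def accuracy(expected: List[List[Action]], predicted: List[List[Action]]) -> float:
    """Fraction of commands whose predicted action list matches the expected one exactly."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    if not expected:
        return 0.0
    hits = sum(1 for exp, pred in zip(expected, predicted) if exp == pred)
    return hits / len(expected)


expected = [
    [{"device": "ac", "op": "on", "when": "temp > 28"}],
    [{"device": "lights", "op": "off"}, {"device": "tv", "op": "off"}],
]

# Illustrative failure modes: a dropped condition and a skipped second step.
before_tuning = [
    [{"device": "ac", "op": "on"}],
    [{"device": "lights", "op": "off"}],
]
after_tuning = expected  # the tuned model is assumed to match on both cases

print(f"Before tuning: {accuracy(expected, before_tuning):.0%}")
print(f"After tuning:  {accuracy(expected, after_tuning):.0%}")
```

Exact match is deliberately strict: a multi-step command with one missing step counts as a full miss, which suits device control, where a partly executed command is still a failure.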

The team credits the platform's end-to-end design: "We spent zero time on infrastructure and could focus entirely on improving our model's performance."

Key Points

- No-code customization makes AI tuning accessible to non-technical teams

- Cloud GPUs eliminate upfront hardware investments

- Visual tools replace opaque parameter files

- Real-world results show dramatic time and quality improvements

"For education, research, or business applications," notes one user, "this finally makes specialized AI practical for organizations without deep pockets or PhDs."
