MiniMax and HUST Open-Source Game-Changing Visual AI Tech

Visual AI Gets a Major Upgrade Without Growing Pains

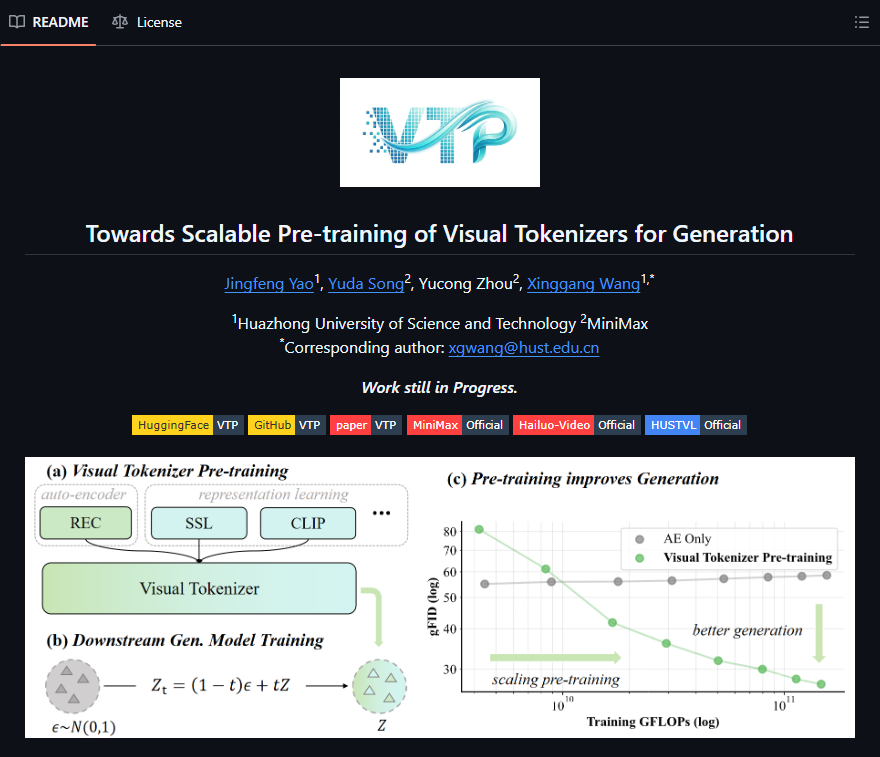

In a move that's shaking up artificial intelligence research, MiniMax has partnered with Huazhong University of Science and Technology (HUST) to open-source VTP (Visual Tokenizer Pretraining). What makes this development remarkable? It delivers a reported 65.8% improvement in image generation quality while leaving the core Diffusion Transformer (DiT) architecture untouched.

The Translator That Changed Everything

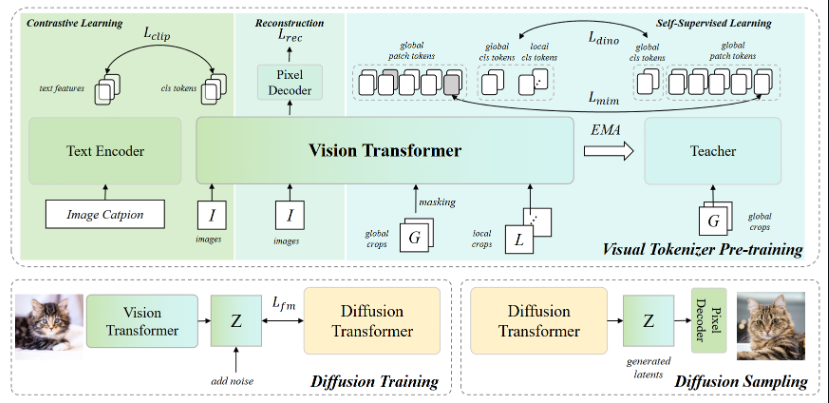

Imagine improving a car's performance not by adding horsepower but by refining its transmission. That's essentially what VTP does for visual AI systems. Models such as DALL·E 3 and Stable Diffusion 3 have largely advanced by enlarging their main generative networks, but VTP takes a different path: optimizing how images are translated into the compact representation the generator actually works with.

The secret sauce lies in VTP's ability to create better "visual dictionaries" during pretraining. These optimized tokenizers produce representations that downstream systems find easier to work with, effectively letting existing DiT models punch well above their weight class.
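To make the "translator" idea concrete, here is a minimal sketch of the interface between a tokenizer and a generator, assuming a simple encode/decode contract. Everything in it is hypothetical and illustrative: `VisualTokenizer`, `PatchTokenizer`, `generator_step`, and `run_pipeline` are not from the VTP release, and the toy tokenizer just cuts an image into fixed patches, whereas VTP's tokenizer is a learned, pretrained model. The point it shows is structural: the generator only ever sees token sequences, so swapping in a better tokenizer requires no change to the generator code.

```python
from typing import List, Protocol

Image = List[List[float]]   # toy grayscale image, H x W
Tokens = List[List[float]]  # sequence of tokens, each a flat vector


class VisualTokenizer(Protocol):
    """Hypothetical contract: map images to token sequences and back."""
    def encode(self, img: Image) -> Tokens: ...
    def decode(self, tokens: Tokens, height: int, width: int) -> Image: ...


class PatchTokenizer:
    """Toy, non-learned tokenizer: splits an image into patch x patch blocks.
    Assumes height and width are divisible by `patch`."""

    def __init__(self, patch: int) -> None:
        self.patch = patch

    def encode(self, img: Image) -> Tokens:
        p = self.patch
        h, w = len(img), len(img[0])
        tokens = []
        for r in range(0, h, p):
            for c in range(0, w, p):
                # Flatten one block row by row into a single token vector.
                tokens.append([img[r + i][c + j] for i in range(p) for j in range(p)])
        return tokens

    def decode(self, tokens: Tokens, height: int, width: int) -> Image:
        p = self.patch
        img = [[0.0] * width for _ in range(height)]
        per_row = width // p
        for k, tok in enumerate(tokens):
            # Token k goes back to the block it came from (same raster order as encode).
            r, c = (k // per_row) * p, (k % per_row) * p
            for i in range(p):
                for j in range(p):
                    img[r + i][c + j] = tok[i * p + j]
        return img


def generator_step(tokens: Tokens) -> Tokens:
    """Stand-in for a fixed DiT: it operates on tokens, never on pixels.
    Here it is an identity map, purely for illustration."""
    return [list(t) for t in tokens]


def run_pipeline(tokenizer: VisualTokenizer, img: Image) -> Image:
    """Encode -> generate -> decode; only the tokenizer is swappable."""
    tokens = tokenizer.encode(img)
    out = generator_step(tokens)
    return tokenizer.decode(out, len(img), len(img[0]))
```

For a 4x4 image, `PatchTokenizer(2).encode(img)` yields four tokens of length four, and `run_pipeline` round-trips the image unchanged because the stand-in generator is the identity. Replacing `PatchTokenizer` with any other object that satisfies the same contract leaves `generator_step` untouched, which mirrors the article's claim that VTP needs no DiT modifications.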

More Than Just Better Numbers

VTP isn't just another incremental improvement; it represents a fundamental shift in how we think about scaling AI capabilities:

- Establishes what the team describes as the first theoretical framework linking tokenizer quality directly to generation performance

- Demonstrates clear "tokenizer scaling" laws, analogous to the scaling laws observed as model size grows

- Opens new efficiency frontiers beyond the endless parameter arms race
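Scaling laws of this kind are usually expressed as a power law, where a quality or error metric changes as a power of some resource. As a minimal sketch, assuming the form $y = a \cdot x^{b}$ (with $x$ standing in for something like tokenizer pretraining compute and $y$ for a generation error metric), the exponent can be estimated with ordinary least squares in log-log space. The function name `fit_power_law` is hypothetical, this is not the VTP authors' fitting procedure, and the data in the test are synthetic rather than results from the paper.

```python
import math
from typing import List, Tuple


def fit_power_law(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Fit y = a * x**b by ordinary least squares on (log x, log y).

    Returns (a, b). All xs and ys must be positive, and the xs must not
    all be equal (otherwise the slope is undefined).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired points")
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    sxx = sum((u - mx) ** 2 for u in lx)
    if sxx == 0:
        raise ValueError("xs must not all be equal")
    sxy = sum((u - mx) * (v - my) for u, v in zip(lx, ly))
    b = sxy / sxx                 # slope in log-log space = exponent
    a = math.exp(my - b * mx)     # intercept, mapped back from log space
    return a, b
```

A negative fitted `b` means the error metric keeps falling as the resource grows; how steep that slope is, and over what range it holds, is what a "tokenizer scaling" claim would need to establish empirically.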

The implications are profound. Instead of constantly demanding more computing power, future improvements might come from smarter preprocessing - potentially democratizing high-quality visual AI.

Open Source for Wider Impact

The research team isn't keeping this breakthrough locked away. They've released the code, pretrained models, and training methodology, with compatibility with existing DiT implementations in mind. This means even small teams could potentially achieve results rivaling much larger competitors.

The timing couldn't be better as the industry shifts focus from pure scale to system-wide efficiency. VTP exemplifies how thoughtful engineering can sometimes outperform brute computational force.

Key Points:

- 65.8% improvement in generation quality achieved through tokenizer optimization alone

- No DiT modifications required; works with existing implementations

- Full open-source release lowers barriers to adoption

- Challenges assumptions about where performance gains must come from

- Potential paradigm shift toward more efficient AI development paths

The complete technical details are available in their research paper, with implementation code on GitHub.