Meet Kosmos: The AI Scientist That Does Six Months of Research in Half a Day

AI Research Gets Turbocharged with Kosmos

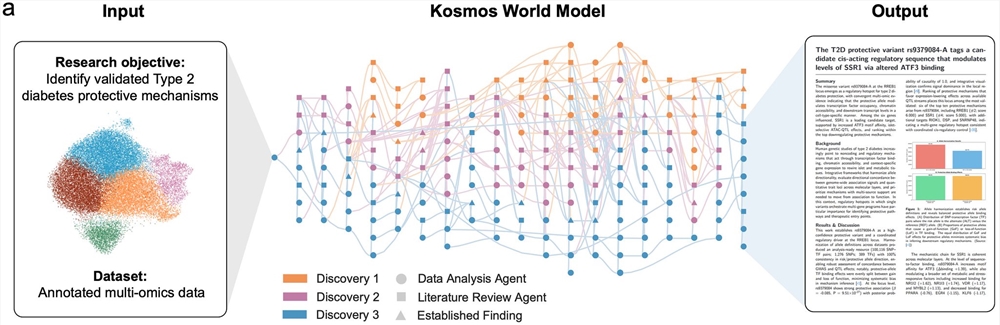

Imagine completing six months of intensive scientific research in a single 12-hour run. That's what FutureHouse says its new AI system, Kosmos, can do, and it's already making waves across multiple scientific disciplines.

How This Digital Scientist Works

At its core, Kosmos operates like the most disciplined researcher you've ever met - one who never needs coffee breaks or sleep. In a single session, the system can:

- Read 1,500 academic papers

- Generate 42,000 lines of analysis code

- Produce fully traceable reports with citations

The secret sauce? A "structured world model" that keeps the system's reasoning logically coherent across massive datasets - think of it as an ultra-organized digital brain capable of holding over 10 million tokens' worth of context.

Real-World Breakthroughs Already Happening

The proof is in the discoveries. Kosmos has already:

- Confirmed that nucleotide metabolism plays a crucial role in how the brain responds to low temperatures

- Identified an absolute humidity threshold affecting perovskite solar cells (above 60 g/m³, the cells fail)

- Independently replicated three unpublished studies

- Made four completely novel findings in neuroscience and materials science
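For readers unfamiliar with the unit in the humidity finding: absolute humidity is the mass of water vapor per cubic meter of air, and it can be estimated from temperature and relative humidity. The sketch below is illustrative only and is not Kosmos's own code. It assumes the Magnus approximation for saturation vapor pressure, treats the 60 g/m³ figure above as a simple cutoff, and uses function names chosen here for the example.

```python
import math

# Threshold quoted in the article (g/m^3); treated here as a simple cutoff.
HUMIDITY_LIMIT_G_M3 = 60.0


def absolute_humidity(temp_c: float, rel_humidity_pct: float) -> float:
    """Estimate absolute humidity (g/m^3) from temperature (deg C) and relative humidity (%)."""
    # Magnus approximation for saturation vapor pressure over water, in hPa.
    saturation_hpa = 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))
    vapor_hpa = saturation_hpa * rel_humidity_pct / 100.0
    # Ideal-gas conversion from vapor pressure (hPa) to water mass per volume (g/m^3).
    return 216.7 * vapor_hpa / (temp_c + 273.15)


def exceeds_limit(temp_c: float, rel_humidity_pct: float,
                  limit: float = HUMIDITY_LIMIT_G_M3) -> bool:
    """True if the estimated absolute humidity is above the given limit."""
    return absolute_humidity(temp_c, rel_humidity_pct) > limit
```

Under this approximation, ordinary room air at 25 °C and 50% relative humidity works out to roughly 11 to 12 g/m³, well below the threshold, while hot, very humid air (for example 50 °C at 80% relative humidity) lands above 60 g/m³.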

"What excites us most isn't just the speed," explains a FutureHouse researcher who asked not to be named ahead of publication, "but how it connects dots across disciplines that humans might miss."

Affordable Science Acceleration

The best part? This research turbocharger comes at bargain-basement prices:

- $200 per run for commercial users

- Free credits available for academics

- Optimized for datasets under 5GB (though larger projects are possible)

The system isn't perfect yet - cross-domain reasoning accuracy hovers around 58%, so conclusions that span disciplines deserve a second look. But at $200 per run, many teams may find that trade-off easy to accept.
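To make the pricing concrete, here is a small back-of-the-envelope sketch. The $200 per-run price and the 5GB guideline come from the figures above. The function names, the decimal definition of a gigabyte, and the idea of budgeting in whole runs are assumptions made for illustration; none of this is part of any FutureHouse API.

```python
PRICE_PER_RUN_USD = 200             # commercial price quoted in the article
RECOMMENDED_MAX_BYTES = 5 * 10**9   # "under 5GB", assuming decimal gigabytes


def runs_within_budget(budget_usd: float) -> int:
    """Number of whole commercial runs that a budget covers."""
    return int(budget_usd // PRICE_PER_RUN_USD)


def within_recommended_size(dataset_bytes: int) -> bool:
    """True if the dataset is under the size the system is said to be optimized for."""
    return dataset_bytes < RECOMMENDED_MAX_BYTES
```

For example, `runs_within_budget(1000)` gives 5 runs, and a 2 GB dataset passes the size check while a 6 GB one does not (larger projects are described as possible, just not the sweet spot).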

What's Next?

The FutureHouse team isn't resting on its laurels. It is already working on integrating lab automation equipment to create a complete "hypothesis-experiment-analysis" loop. Soon, Kosmos might not just analyze data but design and run experiments too.

Key Points:

- Speed: Completes half a year's human research work in just 12 hours

- Capacity: Processes thousands of papers while maintaining logical coherence

- Affordability: $200 per run makes advanced research accessible

- Limitations: Best with sub-5GB datasets; cross-domain accuracy needs improvement

- Future Plans: Full integration with lab equipment for end-to-end experimentation