ManimML: AI Animation Tool Simplifies Transformer Visualization

ManimML: Bridging the Gap in AI Visualization

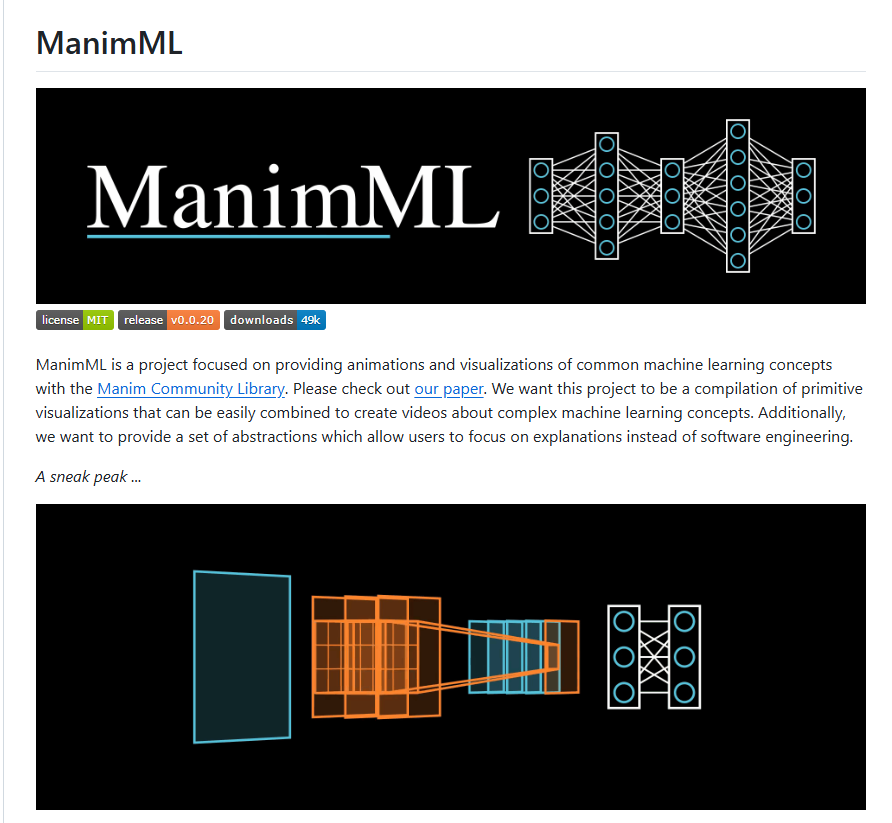

With artificial intelligence advancing rapidly, explaining complex models like the Transformer architecture has become a critical challenge. ManimML, an open-source animation library built on Python, is addressing this issue by turning abstract machine learning concepts into dynamic visualizations.

A New Standard for Technical Communication

Developed as an extension of the Manim Community Edition, ManimML specializes in animating neural network architectures. Rather than producing static diagrams, it renders animated teaching materials that show algorithms in action. This approach has proven particularly valuable for illustrating:

- Transformer models

- Convolutional Neural Networks (CNNs)

- Forward/backward propagation processes
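The forward-propagation item is easiest to picture as a sequence of layer activations. The sketch below is plain Python and does not use ManimML. The helper names (`dense`, `relu`, `forward_pass_trace`), the 2-3-1 network, and its weights are hypothetical choices for illustration. It records each layer's output in order, which is the kind of step-by-step state a forward-pass animation highlights.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, bias); one weight row per output unit (hypothetical layout)


def dense(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Fully connected layer: y = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in v]


def forward_pass_trace(layers: List[Layer], x: Vector) -> List[Vector]:
    """Return every layer's activations, input first.

    Each entry is one step a forward-pass animation would highlight.
    ReLU is applied after every layer, including the last, for simplicity.
    """
    trace = [x]
    for weights, bias in layers:
        x = relu(dense(weights, bias, x))
        trace.append(x)
    return trace


# Hypothetical 2 -> 3 -> 1 network with hand-picked weights
layers: List[Layer] = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]], [0.0, 0.0, 0.5]),
    ([[1.0, 1.0, 1.0]], [0.0]),
]

for step, activations in enumerate(forward_pass_trace(layers, [2.0, 1.0])):
    print(f"layer {step}: {activations}")
# layer 0: [2.0, 1.0]
# layer 1: [2.0, 1.0, 1.5]
# layer 2: [4.5]
```

A backward-pass animation walks the same layers in reverse order, propagating gradients instead of activations.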

Intuitive Design for Maximum Impact

The library's key strength is an interface modeled after popular deep learning frameworks like PyTorch: a network is declared as an ordered list of layers. Developers can generate polished animations with just a few lines of code:

```python
# Sample ManimML scene: animate a forward pass through a small network
from manim import Scene
from manim_ml.neural_network import NeuralNetwork, FeedForwardLayer

class ForwardPassScene(Scene):
    def construct(self):
        # Declare the network as an ordered list of layers
        nn = NeuralNetwork([
            FeedForwardLayer(3),
            FeedForwardLayer(5),
            FeedForwardLayer(3),
        ])
        self.add(nn)
        self.play(nn.make_forward_pass_animation())
```

Users can also generate custom animations by providing a GitHub repository link and a natural-language description to an AI-powered system.

Industry Adoption and Recognition

Since its launch, ManimML has achieved significant milestones:

- 1,300+ GitHub stars

- 23,000+ PyPI downloads

- Hundreds of thousands of social media views for demo videos

The tool received the Best Poster Award at IEEE VIS 2023, cementing its reputation in the visualization community. Academics increasingly incorporate ManimML-generated content into research papers and conference presentations.

Transforming AI Education

The implications for education are profound:

- University lecturers use it to demonstrate algorithms dynamically

- Online course creators enhance engagement with animated examples

- Technical writers simplify complex concepts for broader audiences

As the open-source community continues to expand ManimML's capabilities, it is poised to become an essential tool for democratizing the understanding of AI.

Key Points:

- Visualization breakthrough: Makes complex AI architectures accessible through animation

- Low barrier to entry: Python syntax familiar to ML practitioners reduces learning curve

- Proven adoption: Strong traction in both academic and developer communities

- Educational potential: Could reshape how AI concepts are taught at all levels