Lightricks Unveils Open-Source AI That Creates Videos With Sound in Seconds

Lightricks Breaks New Ground With LTX-2 AI Video Generator

In a move that could democratize video creation, Lightricks has open-sourced LTX-2, an AI system that produces high-quality video with synchronized audio in mere seconds. The release challenges the conventional approach: instead of generating picture and sound one after the other, LTX-2 generates both at once.

How It Works: Seeing and Hearing Together

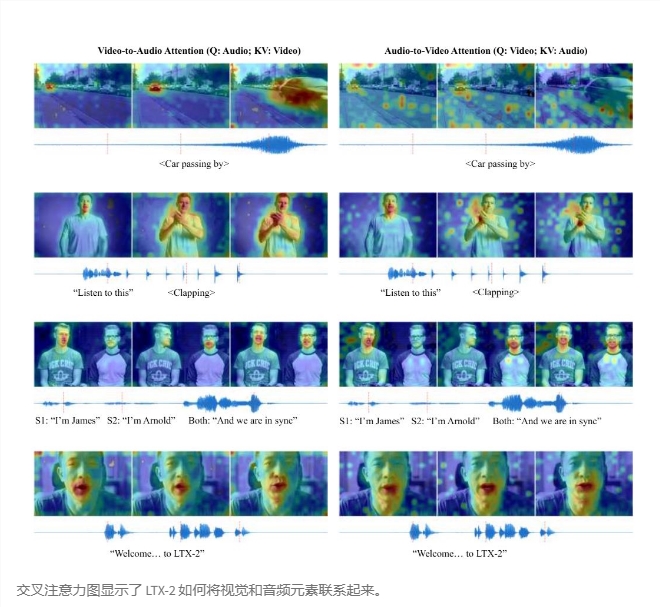

The secret lies in LTX-2's dual-stream architecture. Most systems generate visuals first and add sound afterwards. This model instead mirrors real-world perception by processing both streams concurrently. It has 19 billion parameters in total (14B for video, 5B for audio), and this asymmetric design devotes most of its capacity to the visual stream, which carries far more information than the audio track.

"Traditional methods create an artificial separation," explains the development team. "Our brains don't process a car crash visually and then auditorily; we experience both together, instantly."
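To make the "concurrent rather than sequential" idea concrete, here is a toy sketch in Python. It is not LTX-2's code: it assumes, for illustration only, that the two streams exchange information through bidirectional cross-attention, and the function names (`cross_attend`, `joint_step`) are hypothetical. The property it demonstrates is that both streams are updated from the same pre-update state, so neither modality waits for the other. A real model would add learned projections, many stacked layers and a much wider video stream than audio stream.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of scores
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attend(queries, other_stream):
    """Each query token attends over the other stream's tokens.

    Hypothetical simplification: the other stream's tokens serve directly
    as both keys and values (no learned projections).
    """
    scale = 1.0 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in other_stream])
        # Weighted mix of the other modality's tokens, dimension by dimension
        mixed = [sum(w * tok[d] for w, tok in zip(weights, other_stream))
                 for d in range(len(q))]
        # Residual connection: keep the token's own content, add cross-modal context
        out.append([qi + mi for qi, mi in zip(q, mixed)])
    return out


def joint_step(video_tokens, audio_tokens):
    """One toy dual-stream step: both updates read the *current* state of the
    other stream, so video and audio are refined simultaneously."""
    new_video = cross_attend(video_tokens, audio_tokens)
    new_audio = cross_attend(audio_tokens, video_tokens)
    return new_video, new_audio


if __name__ == "__main__":
    video = [[1.0, 0.0], [0.0, 1.0]]   # two toy video tokens
    audio = [[0.5, 0.5]]               # one toy audio token
    v, a = joint_step(video, audio)
    print(v)  # → [[1.5, 0.5], [0.5, 1.5]]
    print(a)  # → [[1.0, 1.0]]
```

With a single audio token, every video token receives the same audio context, while the audio token blends both video tokens equally because their attention scores tie. A sequential pipeline would instead finish the video tokens first and only then let audio read them.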

Blazing Speed Meets Practical Applications

Performance tests reveal staggering efficiency:

- Generates 720p content at 1.22 seconds per step

- Operates 18x faster than comparable systems

- Handles 20-second sequences, surpassing Google's comparable model on clip length

The system particularly shines when depicting cause-and-effect scenarios, such as aligning the sound of breaking glass precisely with the moment it shatters on screen.
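The per-step figure only becomes a clip-generation time once it is multiplied by the number of generation steps, which the article does not state. The sketch below treats 40 steps as a purely hypothetical value and derives the per-step time that the reported 18x speedup implies for a comparable system. `wall_clock_seconds` and `HYPOTHETICAL_STEPS` are illustrative names, not part of any LTX-2 API.

```python
# Figures reported in the article
LTX2_SECONDS_PER_STEP = 1.22   # 720p generation, per step
REPORTED_SPEEDUP = 18          # "18x faster than comparable systems"

# Assumption: the article gives no step count; 40 is a hypothetical value
HYPOTHETICAL_STEPS = 40


def wall_clock_seconds(steps: int, seconds_per_step: float) -> float:
    """Total sampling time only; ignores encoding, decoding and other overheads."""
    return steps * seconds_per_step


# Per-step time a comparable system would need if LTX-2 is 18x faster
implied_baseline_per_step = LTX2_SECONDS_PER_STEP * REPORTED_SPEEDUP

ltx2_total = wall_clock_seconds(HYPOTHETICAL_STEPS, LTX2_SECONDS_PER_STEP)
baseline_total = wall_clock_seconds(HYPOTHETICAL_STEPS, implied_baseline_per_step)

print(f"implied baseline: {implied_baseline_per_step:.2f} s/step")
print(f"LTX-2: {ltx2_total:.1f} s, comparable system: {baseline_total:.1f} s")
```

Under that assumed step count, a clip would take about 49 seconds of sampling on LTX-2 versus roughly 878 seconds on a system 18 times slower. The actual totals depend on the real step count and on hardware.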

Why Open-Source Matters

Co-founder and CEO Zeev Farbman emphasizes accessibility: "Creators should control their tools, not depend on corporate gatekeepers." The decision to release LTX-2 publicly contrasts sharply with competitors' closed ecosystems.

The model does face some limitations:

- Occasional glitches with rare dialects or multi-speaker dialogue

- Difficulty maintaining audio-video sync beyond 20 seconds

But these hurdles seem minor compared to its transformative potential.

The complete framework is now available online and optimized for consumer-grade GPUs, meaning anyone with decent hardware can experiment with professional-grade audiovisual generation.

Key Points:

- Simultaneous processing of audio and visual streams mimics human perception

- Open-source model prioritizes creator control over walled gardens

- Remarkable speed: Generates 720p clips roughly 18x faster than comparable systems

- Practical applications: Ideal for content creators needing quick, high-quality video production