JD Cloud's JoyBuilder Achieves Breakthrough in AI Training Speed

The race to develop smarter artificial intelligence just got faster. JD Cloud's JoyBuilder platform has cut thousand-card training runs, meaning jobs spread across roughly a thousand accelerator cards, from a grueling 15 hours down to just 22 minutes.

Behind the Speed Boost

What makes this breakthrough impressive is not only the raw numbers but also how the engineers achieved it. The team implemented deep optimizations across the entire training pipeline:

- Data processing now happens asynchronously with GPU computations, eliminating wasteful waiting periods

- A custom-built parallel file system delivers staggering read speeds exceeding 400 GB/s

- The platform's 3.2T RDMA network keeps communication between thousands of accelerator cards from becoming a bottleneck
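
The first item above, overlapping data processing with GPU computation, can be illustrated with a minimal sketch. This is not JoyBuilder's implementation, which the article does not describe in detail. It is a generic prefetching pattern built only on Python's standard library. The names `load_batch`, `train_step`, and `prefetching_loader` are hypothetical, and the `time.sleep` calls merely stand in for real I/O and accelerator work.

```python
import queue
import threading
import time


def load_batch(index):
    """Hypothetical stand-in for CPU-side data loading and preprocessing."""
    time.sleep(0.01)  # simulated I/O and decoding latency
    return [index] * 4


def train_step(batch):
    """Hypothetical stand-in for a forward/backward pass on the accelerator."""
    time.sleep(0.01)  # simulated compute time
    return sum(batch)


def prefetching_loader(num_batches, depth=2):
    """Yield batches prepared by a background thread, up to `depth` ahead."""
    buffer = queue.Queue(maxsize=depth)
    sentinel = object()  # marks the end of the stream

    def producer():
        for i in range(num_batches):
            buffer.put(load_batch(i))  # blocks while the buffer is full
        buffer.put(sentinel)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buffer.get()
        if item is sentinel:
            return
        yield item


def run_sequential(num_batches):
    """Baseline: each step waits for its own batch to load."""
    return [train_step(load_batch(i)) for i in range(num_batches)]


def run_overlapped(num_batches):
    """Loading of the next batch proceeds while the current step computes."""
    return [train_step(batch) for batch in prefetching_loader(num_batches)]
```

Both functions produce the same results. In the overlapped version the next batch is already being prepared while the current step runs, so the consumer spends less time waiting on data. Production frameworks typically offer this pattern through their own data-loading utilities rather than hand-written threads.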

"We didn't just tweak one component - we reimagined the entire workflow," explains a JD Cloud spokesperson. "From data ingestion to final model output, every step received careful optimization."

Why This Matters for AI Development

The implications extend far beyond bragging rights about processing speed. Faster training cycles mean:

- Researchers can iterate more quickly on new ideas

- Businesses can deploy AI solutions sooner

- Complex models that were previously impractical become feasible

The platform particularly shines with Vision-Language-Action (VLA) models, which combine visual perception, language understanding, and action generation in a single model.

Looking Ahead

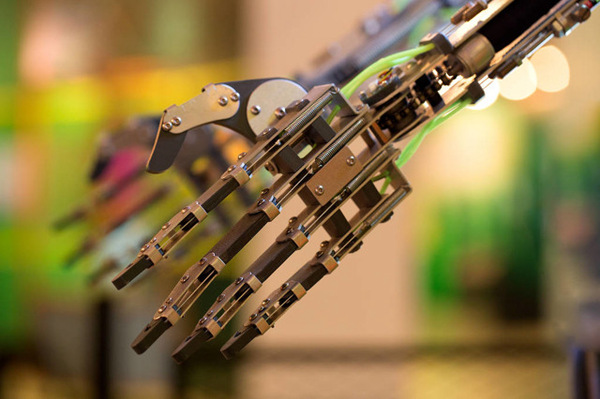

With this upgrade, JoyBuilder establishes itself as a serious contender in the competitive world of AI development platforms. Its support for the LeRobot framework positions it at the forefront of embodied intelligence research - where machines learn to interact with physical environments.

The team hints at even more innovations coming soon: "We're just getting started," says one engineer. "This is foundation work for what comes next."

Key Points:

- Thousand-card training time reduced from 15 hours to 22 minutes

- 3.5x faster than open-source alternatives

- Supports the cutting-edge GR00T N1.5 model

- Optimized for embodied intelligence applications