Apple's AI Paper Hits Snag: Benchmark Errors Trigger Late-Night Debugging Frenzy

Apple's Visual Reasoning Paper Requires Emergency Fix After Benchmark Errors Surface

The AI research community buzzed with controversy this week as flaws emerged in an Apple paper submitted to ICLR 2025. The study, which boldly claimed smaller models could surpass GPT-5's visual reasoning capabilities, now faces serious questions about its methodology.

The Discovery That Shook the Team

Lei Yang, a researcher at Jiechu Star, stumbled upon troubling inconsistencies while attempting to replicate the study's results. "At first I thought I must be doing something wrong," Yang admitted. "Then I realized the official code completely omitted crucial image inputs."

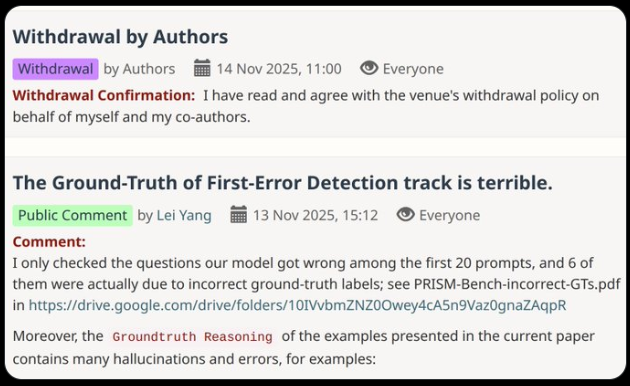

The problems didn't stop there. When Yang examined a sample of 20 test questions, he found six contained incorrect ground truth labels—an error rate suggesting nearly one-third of the benchmark data might be flawed.

Swift Response But Lingering Questions

Yang's GitHub issue initially received scant attention before being abruptly closed. Undeterred, he published a detailed critique that quickly went viral across academic circles. Within 24 hours, Apple's research team acknowledged "defects in the data generation process" and rushed out corrected benchmarks.

The incident highlights growing pains in AI research methodology:

- Automated dataset generation without proper validation checks

- Pressure to demonstrate breakthroughs against larger models

- The human cost when errors slip through—countless hours wasted replicating flawed work

"Before you burn midnight oil on replication," Yang advises fellow researchers, "run a quick diagnostic check first."

The episode serves as a cautionary tale about maintaining rigorous standards even amid fierce competition to push boundaries in artificial intelligence.

Key Points:

- Apple paper claimed small models beat GPT-5 at visual reasoning tasks

- Independent researcher found missing code components and labeling errors affecting ~30% of benchmark data

- Findings prompted urgent corrections from original authors

- Incident sparks debate about quality control in AI research methodologies