Ant Bank Open-Sources High-Performance AI Model Ring-flash-2.0

Ant Bank Unveils Open-Source AI Powerhouse: Ring-flash-2.0

In a significant move for the AI development community, Ant Bank's Bai Ling research team has open-sourced its cutting-edge reasoning model Ring-flash-2.0. This high-performance artificial intelligence system represents a major leap forward in efficient large language model architecture.

Technical Breakthroughs

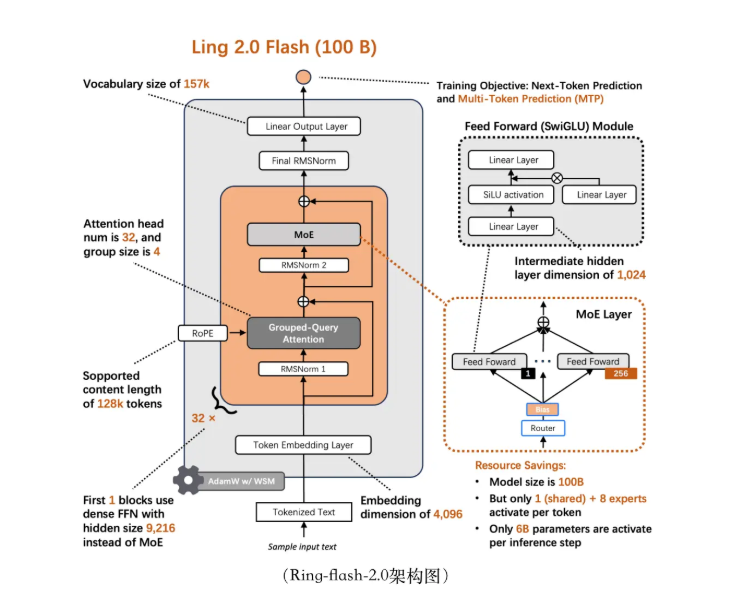

The model builds upon its predecessor Ling-flash-2.0-base with several key innovations:

- Parameter Efficiency: Of its 100 billion total parameters, Ring-flash-2.0 activates only about 6.1 billion per inference through sparse Mixture-of-Experts (MoE) routing

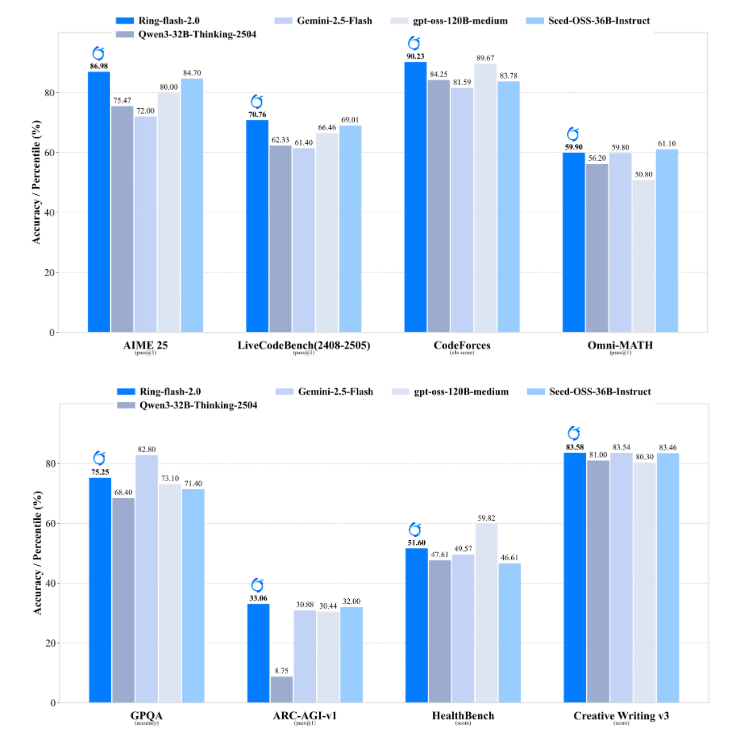

- Benchmark Dominance: The team reports that the model outperforms dense models of up to 40 billion parameters and is competitive with larger sparse MoE architectures

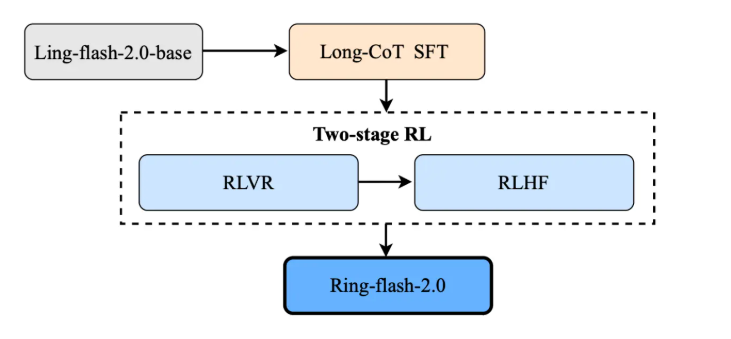

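- Training Innovation: Uses a multi-stage pipeline in which long chain-of-thought supervised fine-tuning (Long-CoT SFT) is followed by two phases of reinforcement learning, first with verifiable rewards (RLVR) and then from human feedback (RLHF)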
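
RLVR, named in the training item above, rewards outputs that can be checked automatically. The sketch below illustrates the idea for a math-style task. The `\boxed{...}` answer convention and the function names `extract_final_answer` and `verifiable_reward` are assumptions made for illustration, not details taken from the Ring-flash-2.0 release.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text inside the last \\boxed{...} in a completion, if any.

    The boxed-answer format is a hypothetical convention for this sketch.
    """
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the extracted answer equals the reference, else 0.0."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0  # no checkable answer was produced
    return 1.0 if answer == reference.strip() else 0.0


# A completion with a long reasoning trace is scored only on its final answer.
good = "First add 40 and 2. So the result is \\boxed{42}"
bad = "I think the result is \\boxed{41}"
rewards = [verifiable_reward(c, "42") for c in (good, bad, "no answer given")]
```

Because the reward comes from a deterministic check, a real pipeline would likely need a more forgiving comparison (for example, numeric equivalence or unit tests for code) than the exact string match used here.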

Performance Highlights

The model demonstrates exceptional capability across multiple challenging domains:

- Mathematical Reasoning: Solves complex quantitative problems with high accuracy

- Code Generation: Produces functional programming solutions across multiple languages

- Logical Analysis: Excels at multi-step deduction and abstract problem-solving

The research team notes these capabilities allow Ring-flash-2.0 to compete with some closed-source commercial AI APIs currently dominating the market.

Open-Source Commitment

In an unusual show of transparency for financial sector AI development, Ant Bank is releasing:

- Complete model weights

- Reinforcement learning training protocols

- Data preparation methodologies

The materials are available through leading AI repositories Hugging Face and ModelScope.

Future Implications

The release signals growing competition in efficient large language models between tech giants and financial institutions. Experts suggest this could accelerate:

- Wider adoption of sparse activation architectures

- New commercial applications requiring precise reasoning

- Academic research into financial-focused AI systems

The Ant Bai Ling team anticipates widespread experimentation with Ring-flash-2.0 across both enterprise and research environments.

Key Points:

- Resource Efficiency: Activating only about 6% of total parameters per query significantly reduces computational cost

- Training Innovation: Long-CoT supervised fine-tuning is followed by two-phase RL, first with verifiable rewards (RLVR) and then with human feedback (RLHF)

- Open Access: Model weights, RL training protocols, and data preparation methods are publicly available

- Performance Parity: Competes favorably against proprietary systems despite smaller active parameter count
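
The gap between total and active parameters comes from sparse routing: a router scores the experts for each input and only the top few are run. The toy sketch below shows that mechanism in plain Python. The expert count, the value of `k`, the router scores, and the scalar "experts" are all made-up illustrative values, and the article does not describe the model's actual router.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    order = sorted(range(len(router_scores)),
                   key=lambda i: router_scores[i], reverse=True)
    top = order[:k]
    # Renormalize over the selected experts so their weights sum to 1.
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_scores, k):
    """Weighted sum of the k selected experts' outputs; the others never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_scores, k))


# Toy setup: 8 scalar "experts", of which 2 are activated per input.
experts = [lambda x, scale=scale: scale * x for scale in range(1, 9)]
router_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

routes = top_k_route(router_scores, k=2)
y = moe_forward(1.0, experts, router_scores, k=2)
active_fraction = 2 / len(experts)  # share of experts that actually ran
```

In this toy case a quarter of the experts run per input. The model's reported ratio of roughly 6% also counts non-expert parameters, so it cannot be read off from an expert count alone.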