Amazon Develops AI Glasses for Delivery Drivers

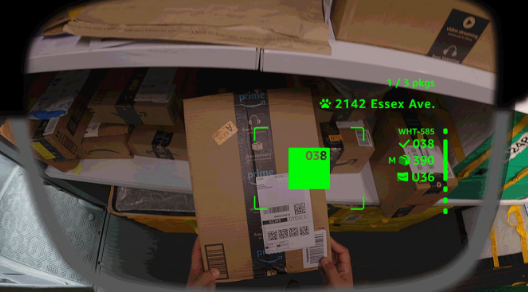

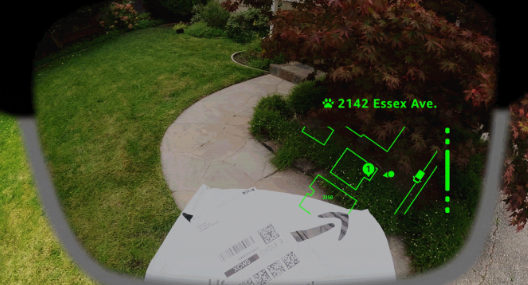

Amazon announced on Wednesday that it is developing AI-powered smart glasses for its delivery drivers. The glasses are meant to streamline deliveries by letting drivers scan packages, follow route navigation, and confirm deliveries hands-free, reducing their reliance on handheld devices.

How the Smart Glasses Work

The glasses combine AI sensing, computer vision, and an embedded camera system to provide real-time updates on:

- Delivery task status

- Road hazards

- Environmental conditions

Upon arrival at a destination, the glasses automatically activate, helping drivers locate packages within their vehicle and navigate complex locations like multi-unit apartments or commercial buildings.

Key Features

- Controller Integration: Paired with a vest-mounted device featuring operational buttons, swappable batteries, and an emergency assist function.

- Adaptive Display: Auto-dimming lenses adjust to lighting conditions, and prescription lenses are available.

- Future Upgrades: A planned "real-time defect detection" feature will alert drivers to misdeliveries and to potential hazards such as pets or poorly lit areas.

Broader Logistics Innovations

The announcement coincided with two other Amazon initiatives:

- Blue Jay: A collaborative robotic arm for warehouse operations.

- Eluna: An AI analytics tool optimizing warehouse workflows.

The company is currently field-testing the glasses in North America before a wider rollout.

Key Points

- Hands-free operation is intended to improve driver safety and efficiency.

- Combines navigation assistance with environmental hazard detection.

- Part of Amazon’s expanding smart logistics ecosystem.