Tsinghua's TurboDiffusion Shatters Speed Barriers in AI Video Creation

Tsinghua's Breakthrough Brings AI Video Generation Into Instant Territory

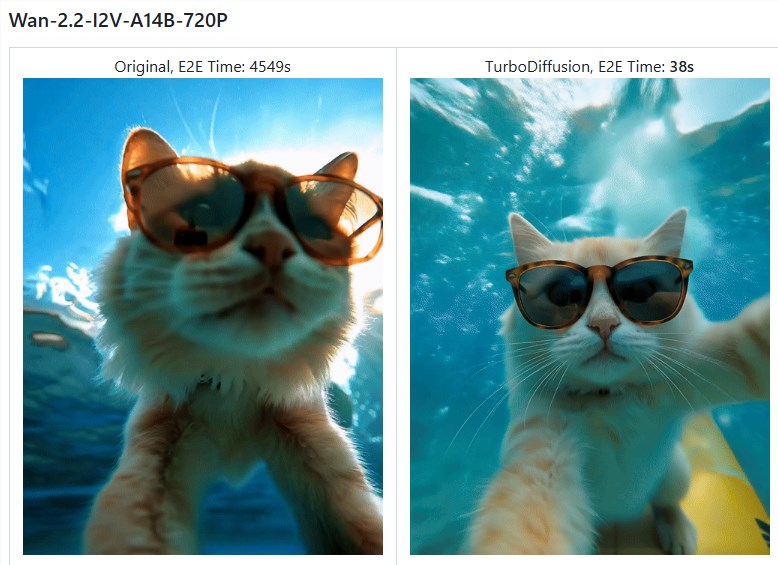

The world of AI-generated video just got dramatically faster. Researchers from Tsinghua University's TSAIL Lab, collaborating with Shengshu Technology, have unveiled TurboDiffusion - an open-source framework that slashes processing times while maintaining impressive visual quality.

How It Works: The Tech Behind the Speed

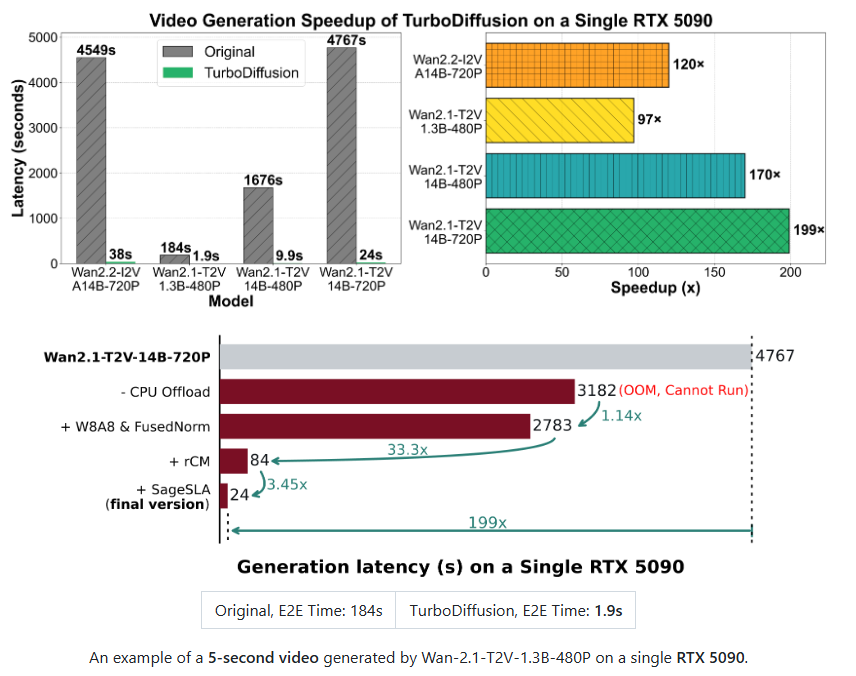

The secret sauce combines SageAttention with SLA (a sparse linear attention mechanism), cutting the computational cost of attention when handling high-resolution footage. But the real game-changer is rCM, a timestep distillation technique that sharply reduces the number of sampling steps while preserving visual consistency.
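To see why these two ideas compound, here is a rough back-of-the-envelope sketch: total generation cost is modeled as the number of sampling steps times the cost of each step, with an Amdahl's-law-style estimate for the attention speedup. The function names and every number are hypothetical placeholders chosen for illustration, not figures or code from TurboDiffusion.

```python
# Toy cost model: total cost = sampling steps x cost per step.
# All numbers below are hypothetical placeholders, not measurements.

def per_step_cost(attention_share: float, attention_speedup: float) -> float:
    """Relative cost of one denoising step after speeding up attention only.

    attention_share: fraction of a step's time spent in attention (0..1).
    attention_speedup: how many times faster the attention computation becomes.
    """
    other = 1.0 - attention_share  # the part of the step that is not accelerated
    return other + attention_share / attention_speedup


def overall_speedup(steps_before: int, steps_after: int,
                    attention_share: float, attention_speedup: float) -> float:
    """Combined speedup from fewer steps and cheaper attention per step."""
    before = steps_before * 1.0  # baseline: each step has relative cost 1.0
    after = steps_after * per_step_cost(attention_share, attention_speedup)
    return before / after


# Hypothetical scenario: 50 -> 4 sampling steps, with attention taking
# 80% of each step and becoming 10x faster.
print(round(overall_speedup(50, 4, 0.8, 10.0), 1))
```

The takeaway from the sketch is that the two savings multiply: step distillation shrinks the number of steps, while faster attention shrinks the cost of each remaining step.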

Performance That Speaks Volumes

The numbers tell an astonishing story:

- A 5-second clip that previously took 184 seconds now renders in just 1.9 seconds on an RTX 5090

- Complex 720p projects shrink from a grueling 1.2-hour wait to a mere 38-second sprint

- Across various benchmarks, speed improvements land in roughly the 100-200x range
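For readers who want to check the math, the speedup factors implied by the two timing examples above can be worked out directly. This is plain arithmetic on the quoted figures; the helper name `speedup` is just for illustration.

```python
# Speedup = baseline time / accelerated time, using the figures quoted above.

def speedup(baseline_s: float, accelerated_s: float) -> float:
    return baseline_s / accelerated_s


clip_5s = speedup(184.0, 1.9)          # 5-second clip: 184 s -> 1.9 s
clip_720p = speedup(1.2 * 3600, 38.0)  # 720p project: 1.2 hours (in seconds) -> 38 s

print(f"5-second clip: {clip_5s:.1f}x")
print(f"720p project:  {clip_720p:.1f}x")
```

The 5-second clip works out to just under 100x and the 720p case to a little over 110x, so both sit at the lower end of the quoted range.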

"This isn't just incremental progress," explains Dr. Liang Zhao from TSAIL Lab. "We're fundamentally changing what's possible with consumer-grade hardware."

Democratizing High-Speed Creation

What makes TurboDiffusion particularly exciting is its accessibility:

- Available now as open-source software on GitHub

- Optimized versions for both consumer GPUs (RTX 4090/5090) and industrial-grade H100 systems

- Includes quantized models for memory-efficient operation on varied hardware setups
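As a rough illustration of why quantized models save memory, here is a minimal sketch of symmetric 8-bit quantization on a handful of made-up weights. The article does not say which quantization scheme or bit width TurboDiffusion uses, so the scheme, the function names, and the sample values are all assumptions for illustration only.

```python
# Symmetric int8 quantization of a small weight list.
# Illustrative only: not TurboDiffusion's actual scheme.

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each weight can now be stored in 1 byte instead of 2 (fp16) or 4 (fp32),
# at the cost of a rounding error of at most about scale / 2 per weight.
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_err)
```

The trade-off is a small rounding error per weight in exchange for a smaller memory footprint, which is what lets larger models fit on cards with less VRAM.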

The implications are profound - individual creators can now experiment freely without render-time headaches, while studios gain unprecedented production efficiency.

GitHub: https://github.com/thu-ml/TurboDiffusion

Key Points:

- ⚡ Lightning Processing: Turns hours into seconds for AI video generation

- 🛠️ Smart Compression: Maintains quality while radically reducing compute needs

- 🌐 Hardware Flexibility: Runs efficiently across consumer and professional GPUs alike