IBM Unveils Granite 4.0 Nano AI Models for Edge Computing

IBM has released four Granite 4.0 Nano models, a family of compact language models built for efficient, small-scale AI deployment. The models range from roughly 350 million to 1.5 billion parameters, reflecting IBM's push to make AI more accessible and practical across diverse applications.

Breaking Free from Cloud Dependency

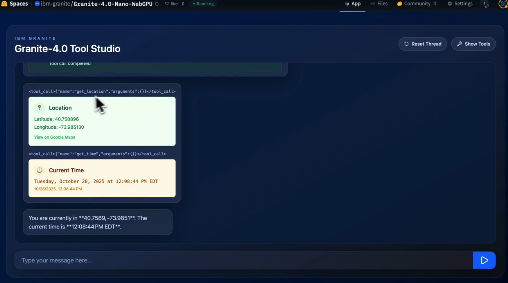

Unlike large language models that typically run on cloud infrastructure, the Granite 4.0 Nano models are designed to run on standard laptops and even inside a web browser. This lets developers build applications for consumer hardware and edge devices without depending on cloud services.
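A quick back-of-the-envelope calculation shows why models of this size can fit on a laptop: the memory needed just to store the weights is roughly the parameter count times the bytes used per parameter. The sketch below is illustrative only. It assumes a weights-only estimate (no KV cache, activations, or runtime overhead), uses 1 GB = 10^9 bytes, and picks ~350M and ~1.5B parameters as example sizes; the precision table is a generic assumption, not an IBM specification.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumptions (illustrative, not IBM figures): weights only, with no KV cache,
# activations, or runtime overhead; 1 GB = 10**9 bytes.

BYTES_PER_PARAM = {
    "fp32": 4.0,   # 32-bit floats
    "bf16": 2.0,   # 16-bit brain floats
    "int8": 1.0,   # 8-bit quantization
    "4-bit": 0.5,  # 4-bit quantization
}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Return approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative sizes: roughly 350 million and 1.5 billion parameters.
    for label, n in [("~350M", 350_000_000), ("~1.5B", 1_500_000_000)]:
        row = ", ".join(
            f"{p}: {weight_memory_gb(n, p):.2f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{label} -> {row}")
```

For example, 1.5 billion parameters at bf16 works out to about 3 GB of weights, and 4-bit quantization brings that down to about 0.75 GB, well within the RAM of a typical laptop. Real memory use will be higher once the runtime and context cache are included.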

Open-Source Accessibility

All Granite 4.0 Nano models are released under the Apache 2.0 license, making them available for:

- Academic researchers

- Enterprise developers

- Independent software creators

The license permits commercial use, and the models work with popular inference tools, including:

- llama.cpp

- vLLM

- MLX

The models have also received ISO/IEC 42001 certification, the international standard for AI management systems, which covers responsible AI development practices.

Model Variants and Architectures

The Granite 4.0 Nano family comprises four distinct models:

- Granite-4.0-H-1B (~1.5B parameters, hybrid)

- Granite-4.0-H-350M (~350M parameters, hybrid)

- Granite-4.0-1B (standard-transformer counterpart to H-1B)

- Granite-4.0-350M (~350M parameters, standard transformer)

The H-series uses a hybrid architecture that combines state space model layers with transformer layers and is optimized for low-latency edge environments, while the non-H models use a conventional transformer architecture for broader compatibility across platforms.
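To make the "state space" idea concrete, the toy example below runs a linear state space recurrence: each token updates a fixed-size hidden state, so memory stays constant however long the input gets. An attention layer, by contrast, keeps keys and values for every past token, so its cache grows with sequence length. This is a minimal sketch for intuition only, not Granite's implementation: it uses a single scalar state and made-up fixed parameters `a`, `b`, `c`, whereas real SSM layers use learned, higher-dimensional, often input-dependent parameters. The function name `ssm_scan` is hypothetical.

```python
# Toy linear state space model (SSM) recurrence, for illustration only.
# NOT Granite's implementation: real SSM layers use learned, multi-dimensional,
# often input-dependent parameters. Here a, b, c are fixed illustrative scalars.

from typing import List, Tuple


def ssm_scan(xs: List[float], a: float, b: float, c: float) -> Tuple[List[float], float]:
    """Run h_t = a*h_{t-1} + b*x_t and y_t = c*h_t over a sequence.

    Only one scalar state h is carried from step to step, so the memory
    used by the recurrence does not grow with the length of xs.
    """
    h = 0.0
    ys: List[float] = []
    for x in xs:
        h = a * h + b * x  # update the fixed-size state with the new input
        ys.append(c * h)   # read the output from the current state
    return ys, h


if __name__ == "__main__":
    ys, h = ssm_scan([1.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0)
    print(ys, h)
    # A much longer sequence still leaves just one float of state.
    _, h_long = ssm_scan([1.0] * 10_000, a=0.5, b=1.0, c=2.0)
    print(h_long)
```

With `a=0.5, b=1.0, c=2.0`, an impulse input `[1.0, 0.0, 0.0]` yields outputs `[2.0, 1.0, 0.5]` and a final state of `0.25`: the state decays geometrically instead of storing the whole history.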

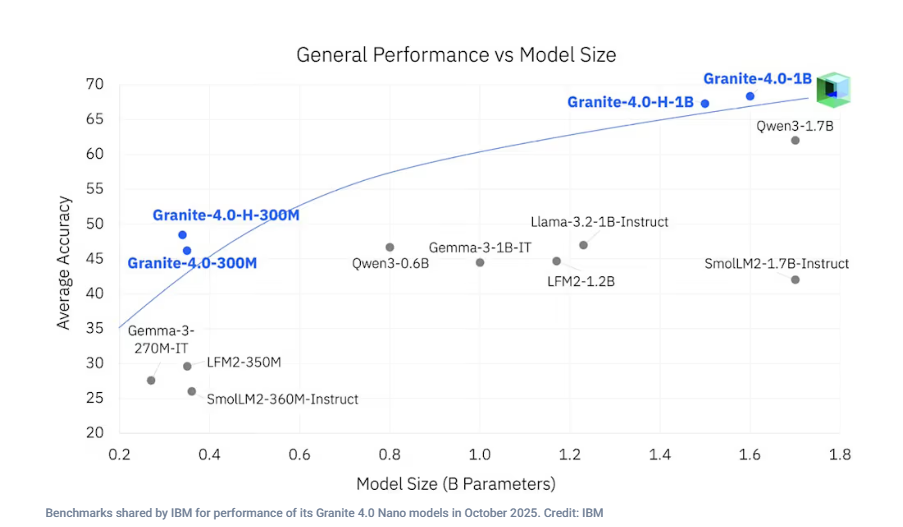

Performance Benchmarks Show Promise

Reported benchmark results show the Nano family outperforming competing small language models of similar size across multiple metrics:

- Superior instruction following capabilities

- Enhanced function calling performance

- Reduced memory requirements

- Faster inference speed

These efficiencies let the models run smoothly on mobile devices and standard CPUs. IBM has also engaged with developer communities on platforms such as Reddit to gather feedback and discuss future enhancements.

For technical details, see the Hugging Face blog.

Key Points

- 🌟 Local Processing Power: Granite 4.0 Nano enables AI applications on consumer hardware without cloud dependency.

- 🛠️ Open Licensing: The Apache 2.0 license supports both research and commercial use.

- 📈 Benchmark Leader: Outperforms comparable small language models in speed and efficiency.