Gemini's Veo 3.1 Now Crafts Videos from Multiple Images

Google's Gemini Takes Video Generation to New Heights

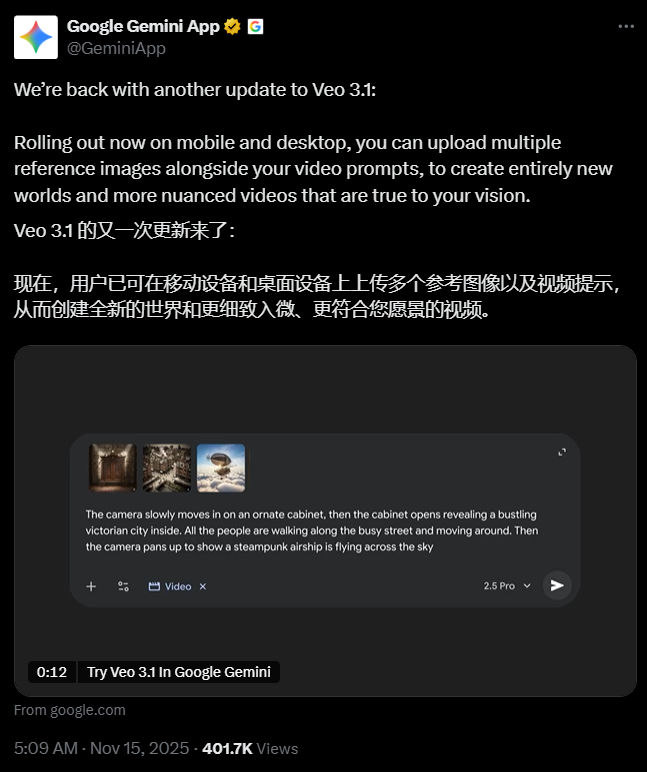

Google's Gemini platform just got more creative with the launch of Veo 3.1 for Pro and Ultra subscribers. The update adds multi-image reference input, control over opening and closing frames, and clip extension to Gemini's AI video generation.

Ingredients for Digital Storytelling

The standout feature? A new "Ingredients to Video" mode that works like a digital blender for visual elements. Users can now upload up to three reference images at once, such as:

- Character portraits (like selfies from different angles)

- Background scenes (such as futuristic cityscapes)

- Style references (including famous painting techniques)

The system then extracts key features from each image and synthesizes them into polished 8-second videos at full HD resolution.
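To make the inputs concrete, here is a minimal Python sketch of how such a request could be modeled. It is purely illustrative and is not Google's API: the names `Role`, `ReferenceImage`, `IngredientsRequest`, and `MAX_REFERENCES` are hypothetical, and only the limits (three reference images, 8-second clips, full HD output) come from the description above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple

# Limit taken from the article; the constant name itself is hypothetical.
MAX_REFERENCES = 3


class Role(Enum):
    """The three kinds of reference image the article describes."""
    CHARACTER = "character"
    SCENE = "scene"
    STYLE = "style"


@dataclass(frozen=True)
class ReferenceImage:
    path: str   # hypothetical local file name, never opened here
    role: Role


@dataclass(frozen=True)
class IngredientsRequest:
    """Hypothetical container for one multi-image generation request."""
    prompt: str
    references: Tuple[ReferenceImage, ...]
    duration_seconds: int = 8    # clip length reported in the article
    resolution: str = "1080p"    # full HD, as reported in the article

    def __post_init__(self) -> None:
        # Reject requests with no references or more than the stated limit.
        if not 1 <= len(self.references) <= MAX_REFERENCES:
            raise ValueError(
                f"expected 1 to {MAX_REFERENCES} reference images, "
                f"got {len(self.references)}"
            )


# Example: one image per role, mirroring the article's list.
request = IngredientsRequest(
    prompt="A walk down a futuristic street",
    references=(
        ReferenceImage("selfie.png", Role.CHARACTER),
        ReferenceImage("city.png", Role.SCENE),
        ReferenceImage("painting.png", Role.STYLE),
    ),
)
```

Tagging each image with a role keeps the character, scene, and style inputs distinct, which is the separation the mode is described as relying on. How Gemini actually represents these inputs internally is not stated in the announcement.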

Behind the Scenes Magic

Early demonstrations showcase Veo 3.1's impressive capabilities. One test combined:

- Multiple angle selfies

- A cyberpunk city backdrop

- An impressionist oil painting style reference

The result? A seamless short film titled "Impressionist Future Street Walk" where facial features remained perfectly consistent throughout.

The technology doesn't stop at visuals either. Veo 3.1 also offers:

- Native ambient soundtracks

- Precise control over opening/closing frames

- Options for extending existing clips

All protected by Google's SynthID invisible watermarking technology.

Access and Availability

Good news for current subscribers: Google confirms the multi-image reference feature is included in existing plans at no additional cost. Generation quotas remain unchanged, but subscribers get more creative options within them.

The web and mobile interfaces maintain their user-friendly design, allowing one-click generation from text prompts while handling all the complex synthesis behind the scenes.

Key Points:

- Multi-image synthesis: Combine character, scene, and style references in one generation

- Technical polish: Maintains consistent lighting and character details frame-to-frame

- Creative control: Offers first/last frame editing and video extension options

- Seamless integration: Works across web and mobile platforms with existing subscriptions