ByteDance's USO Model Unifies Style and Theme in AI Images

ByteDance Bridges AI's Style-Theme Divide with USO Model

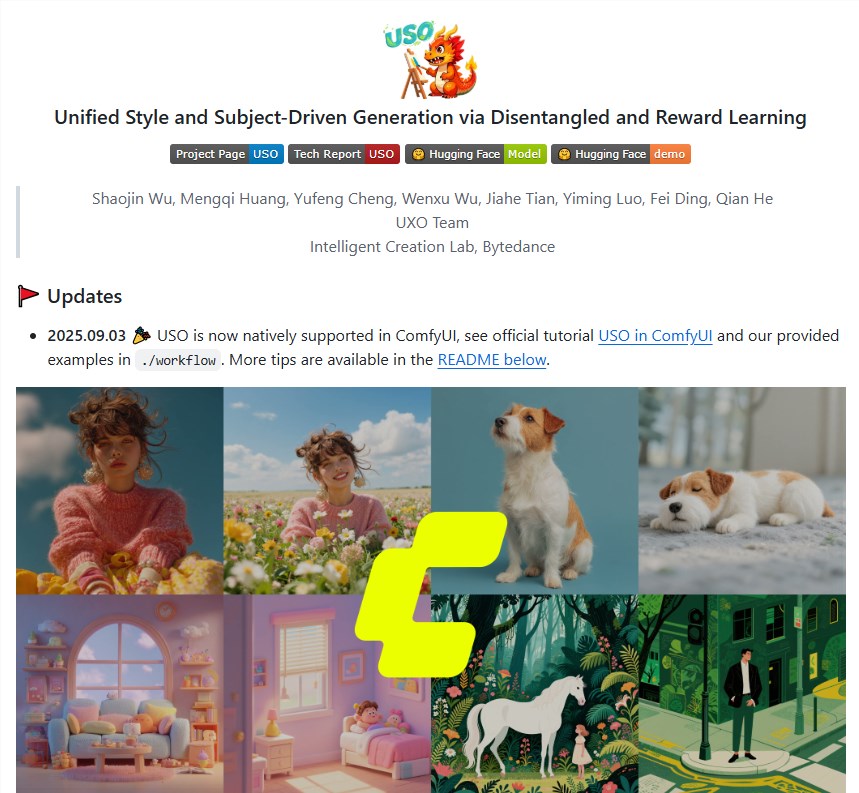

ByteDance's Intelligent Creation Lab has developed USO (Unified Style-Theme Optimization), an image-generation model designed to reconcile two goals long treated as being in tension: stylistic consistency and thematic accuracy.

The Core Innovation

Traditional AI image generation treated style replication and content preservation as separate challenges. ByteDance researchers addressed this through:

- A 200,000-image triplet dataset (style reference + content reference + stylized target)

- Two-phase training: Initial style learning via advanced encoders, followed by content integration

- Style Reward Learning (SRL): Reinforcement mechanism prioritizing stylistic fidelity
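To make the triplet layout and the style-reward idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not ByteDance's implementation: the `Triplet` fields, the cosine-similarity reward, and the `reward_weight` parameter are all hypothetical, and the article does not describe how USO actually computes its reward.

```python
from dataclasses import dataclass
from math import sqrt
from typing import Sequence


@dataclass(frozen=True)
class Triplet:
    """One training example (hypothetical layout): two references plus the stylized target."""
    style_ref: str    # path to the style reference image
    content_ref: str  # path to the content reference image
    target: str       # path to the stylized target image


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either vector is all zeros."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def style_reward(generated_style: Sequence[float], reference_style: Sequence[float]) -> float:
    """Assumed reward: higher when the generated image's style embedding matches the reference."""
    return cosine_similarity(generated_style, reference_style)


def training_objective(reconstruction_loss: float, reward: float,
                       reward_weight: float = 0.1) -> float:
    """Assumed combination: subtract the weighted reward so a better style match lowers the loss."""
    return reconstruction_loss - reward_weight * reward
```

In this sketch, a generated image whose style embedding points in the same direction as the reference earns a reward of 1.0, which reduces the objective by `reward_weight`. A real system would obtain the embeddings from a learned style encoder rather than from hand-written vectors.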

Technical Breakthroughs

The model's architecture demonstrates several engineering feats:

- Decoupled Learning: Style and content processing occur independently before synthesis

- Benchmark Dominance: Outperformed competitors on USO-Bench (ByteDance's evaluation platform)

- Commercial Scalability: Maintains brand consistency across diverse marketing contexts
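The decoupled-learning point above can be sketched as two independent encoders whose outputs are combined only at the final step. This is a toy illustration, assuming images are flat lists of pixel intensities; `toy_style_encoder`, `toy_content_encoder`, and `build_conditioning` are hypothetical names and do not reflect USO's actual architecture.

```python
from typing import List, Sequence

Vector = List[float]


def toy_style_encoder(pixels: Sequence[float]) -> Vector:
    """Stand-in style encoder: summarizes global statistics (mean and value range)."""
    mean = sum(pixels) / len(pixels)
    spread = max(pixels) - min(pixels)
    return [mean, spread]


def toy_content_encoder(pixels: Sequence[float]) -> Vector:
    """Stand-in content encoder: keeps coarse layout by averaging each half of the image."""
    if len(pixels) < 2:
        raise ValueError("need at least two pixels")
    half = len(pixels) // 2
    first = sum(pixels[:half]) / half
    second = sum(pixels[half:]) / (len(pixels) - half)
    return [first, second]


def build_conditioning(style_image: Sequence[float],
                       content_image: Sequence[float]) -> Vector:
    """Encode style and content independently, then concatenate for the generator."""
    style_features = toy_style_encoder(style_image)        # never sees the content image
    content_features = toy_content_encoder(content_image)  # never sees the style image
    return style_features + content_features
```

Because each encoder sees only its own reference, changing the style image changes only the style half of the conditioning vector, which is the property the decoupling is meant to provide.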

Open-Source Strategy

ByteDance has made USO fully accessible via:

- GitHub repository

- Hugging Face demo

This move accelerates adoption across digital art studios, advertising agencies, and indie developers.

Key Points:

- 🖌️ Style-Content Synergy: Presented as the first model to optimize both artistic style and thematic elements simultaneously

- 📈 Data-Driven Approach: Massive curated dataset enables nuanced stylistic understanding

- 🌐 Industry Impact: Potential applications span concept art generation to automated ad production