Ant Afu Clears the Air: Health Q&A Results Free from Ads and Commercial Influence

Ant Afu Takes Stand Against Commercialization in Health Tech

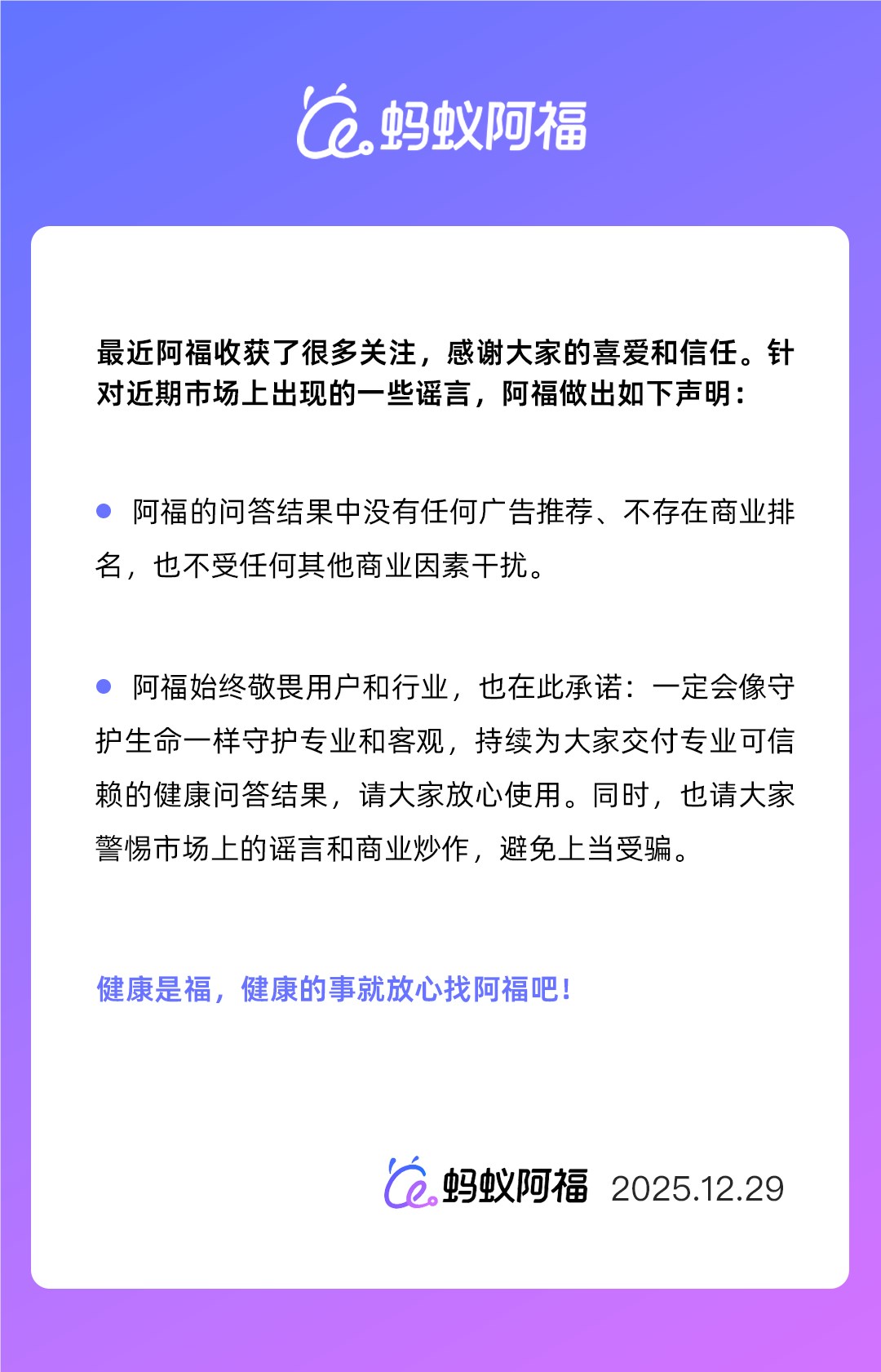

At a time when digital health platforms increasingly blur the line between medical advice and marketing, Ant Afu has drawn a clear boundary. On December 29th, the AI-powered health application issued a firm declaration addressing user concerns about the potential commercialization of its services.

No Ads, No Rankings - Just Answers

The statement leaves no room for ambiguity: "There are no advertisement recommendations in Afu's Q&A results, no commercial rankings, and no interference from other commercial factors." This direct approach resonated with users tired of navigating sponsored content disguised as medical advice.

Protecting Professional Integrity

Ant Afu emphasized its commitment to professional objectivity, likening the protection of that objectivity to safeguarding human life itself. "We respect both our users and the healthcare industry too much to compromise our results," the announcement stated. The company positioned itself as an outlier in an environment where many health apps quietly monetize user queries.

The timing appears strategic. Recent controversies over paid placements on competing platforms have left consumers wary. One user comment captured the prevailing sentiment: "Finally! A health service I can trust without wondering who paid for these answers."

User Vigilance Encouraged

While celebrating its own ad-free model, Ant Afu cautioned users against misinformation circulating in the market. The statement urged discernment when encountering sensational health claims elsewhere, a subtle comment on competitors' practices.

The announcement sparked lively discussion across social media platforms. Many praised the transparency, while others questioned whether such a model can remain sustainable in today's tech landscape.

Key Points:

- Ad-free guarantee: Ant Afu confirms zero advertisements in Q&A responses

- No commercial influence: Results aren't affected by payments or partnerships

- Professional focus: Company prioritizes medical accuracy over monetization

- User reception: Positive response highlights demand for unbiased health information