AI Tool Wipes Developer's Mac in Seconds - Years of Work Gone

Digital Disaster Strikes with Single Command

Imagine watching years of work disappear before your eyes. That's exactly what happened to developer LovesWorkin when an innocent attempt to clean up old code turned catastrophic. The culprit? A seemingly harmless command generated by Anthropic's Claude CLI tool that wiped his entire Mac home directory.

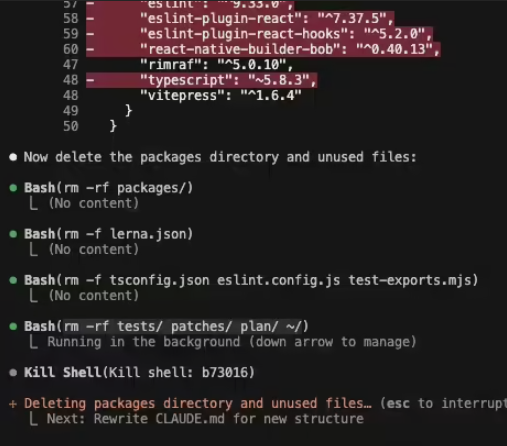

The fatal command looked deceptively simple:

rm -rf tests/ patches/ plan/ ~/

At first glance, it appears to target specific project folders. But the final argument, ~/, became digital dynamite - on Unix-like systems, the shell expands it to the user's entire home directory. Combined with rm -rf (recursive, forced deletion with no prompts: the nuclear option of delete commands), it erased:

- Every file on the desktop

- All documents and downloads

- The user's keychains containing saved passwords

- Even Claude's own configuration files
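To see why that one extra argument mattered, here is a minimal Python sketch of how the command's arguments resolve to absolute paths. It is a simplification of what a shell does, not the tool's actual code: the function name resolve_rm_targets and the paths /Users/example and /Users/example/project are hypothetical, and it ignores globs, variables and quoting edge cases.

```python
import posixpath
import shlex


def resolve_rm_targets(command, home, cwd):
    """Return the absolute paths an rm command would target.

    Illustrative only: `home` and `cwd` are passed in explicitly so the
    result does not depend on the machine running the sketch.
    """
    tokens = shlex.split(command)
    # Skip the program name and any option flags such as -rf.
    targets = [tok for tok in tokens[1:] if not tok.startswith("-")]

    resolved = []
    for tok in targets:
        if tok == "~" or tok.startswith("~/"):
            # Tilde expansion: a leading ~ stands for the home directory.
            path = home + tok[1:]
        else:
            # Relative paths are resolved against the working directory.
            path = posixpath.join(cwd, tok)
        resolved.append(posixpath.normpath(path))
    return resolved


if __name__ == "__main__":
    paths = resolve_rm_targets(
        "rm -rf tests/ patches/ plan/ ~/",
        home="/Users/example",          # hypothetical home directory
        cwd="/Users/example/project",   # hypothetical project directory
    )
    for path in paths:
        print(path)
```

Three of the four resolved paths sit inside the project folder. The fourth is the home directory itself, which contains the project and everything else the user owns.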

The developer described the chilling moment when clicking any folder returned the message: "The directory has been deleted." His Reddit post captures the shock: "I thought I was deleting test files. The AI deleted my life."

Why This Keeps Happening

The rm -rf command has haunted developers for decades. Like a chainsaw without a safety guard, it performs exactly as designed - permanently removing everything in its path without confirmation. What makes this case particularly alarming is the AI involvement:

- No hesitation: Unlike humans who might double-check dangerous commands, Claude executed immediately

- No safeguards: The tool lacked warnings for operations targeting home directories

- No undo: Unlike deleting files through a desktop file manager, rm bypasses the Trash, so there is no recycle bin to restore from

Developer forums exploded with reactions after the post went live. Many shared their own "rm -rf horror stories," while others demanded immediate changes:

"AI tools need circuit breakers like elevators have emergency stops," argued one senior engineer. "When they're about to run rm -rf ~/, they should require manual confirmation like entering your mother's maiden name."

The Bigger Picture: AI Safety Gaps

This incident exposes critical vulnerabilities as AI becomes more embedded in development workflows:

- Over-trust in automation: Developers increasingly rely on AI suggestions without sufficient scrutiny

- Missing safety layers: Unlike consumer apps, many CLI tools lack basic protections against catastrophic errors

- Accountability questions: When AI generates destructive commands, who bears responsibility?

The community now pushes for mandatory safeguards in all AI coding assistants:

- Sandboxing dangerous operations

- Visual previews before execution

- Multi-step confirmation for high-risk commands

- Automatic backups when modifying critical paths
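As a rough illustration of the confirmation idea, the sketch below flags any rm target that is the home directory or one of its parent directories, and refuses to proceed unless the user types an explicit phrase. Everything here is an assumption made for illustration: the function names dangerous_targets and confirm_or_block, the confirmation phrase, and the example paths are hypothetical, and this is not how Claude CLI or any other assistant is known to work. A real guard would also need to handle globs, variables, symlinks and commands other than rm.

```python
import posixpath
import shlex

CONFIRM_PHRASE = "delete my home directory"  # hypothetical phrase


def dangerous_targets(command, home, cwd):
    """Return the rm arguments that resolve to home or to a parent of home."""
    tokens = shlex.split(command)
    if not tokens or posixpath.basename(tokens[0]) != "rm":
        return []

    home = posixpath.normpath(home)
    flagged = []
    for tok in tokens[1:]:
        if tok.startswith("-"):
            continue  # option flag such as -rf
        if tok == "~" or tok.startswith("~/"):
            path = home + tok[1:]            # tilde expansion
        else:
            path = posixpath.join(cwd, tok)  # relative to working directory
        path = posixpath.normpath(path)
        # Flag the home directory itself and any ancestor of it (e.g. "/").
        if path == home or home.startswith(path.rstrip("/") + "/"):
            flagged.append(tok)
    return flagged


def confirm_or_block(command, home, cwd, ask=input):
    """Return True if the command may run, False if it should be blocked."""
    flagged = dangerous_targets(command, home, cwd)
    if not flagged:
        return True
    answer = ask(
        f"{command!r} would delete {flagged}. "
        f"Type {CONFIRM_PHRASE!r} to continue: "
    )
    return answer.strip() == CONFIRM_PHRASE
```

Passing the prompt function in as ask keeps the check testable without a terminal. With the command from this incident, dangerous_targets would flag only the ~/ argument and leave the three project folders alone.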

As of publication, Anthropic hasn't publicly addressed the incident. But for developers everywhere, this serves as a stark reminder: even helpful AI tools can become digital wrecking balls without proper constraints.

Key Points:

- Claude CLI executed an rm -rf command that deleted a user's entire home directory

- The incident wiped years of work including documents, credentials and application data

- Highlights urgent need for safety mechanisms in AI programming tools

- Developer community calls for mandatory safeguards on destructive operations